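Chapter 1: Deploying a Kubernetes cluster with RKE

Machines: https://blog.csdn.net/godservant/article/details/80895970

Install Docker

Install Docker on all four machines. Supported versions: 1.11.x, 1.12.x, 1.13.x, 17.03.x.

Install docker-compose:

    chmod +x docker-compose
    mv docker-compose /usr/bin/

Then enable the Docker service at boot (for example with systemctl enable docker) and allow insecure registries in the Docker daemon configuration:

    "insecure-registries": ["0.0.0.0/0"]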

Runtime environment

| Software | Version / Image | Notes |
| --- | --- | --- |
| OS | RHEL 7.2 | |
| Docker | 1.12.6 | |
| RKE | v0.0.12-dev | |
| kubectl | v1.8.4 | |
| Kubernetes | rancher/k8s:v1.8.3-rancher2 | |
| canal | quay.io/calico/node:v2.6.2, quay.io/calico/cni:v1.11.0, quay.io/coreos/flannel:v0.9.1 | |
| etcd | quay.io/coreos/etcd:latest | |
| alpine | alpine:latest | |
| Nginx proxy | rancher/rke-nginx-proxy:v0.1.0 | |
| Cert downloader | rancher/rke-cert-deployer:v0.1.1 | |
| Service sidekick | rancher/rke-service-sidekick:v0.1.0 | |
| kubedns | gcr.io/google_containers/k8s-dns-kube-dns-amd64:1.14.5 | |
| dnsmasq | gcr.io/google_containers/k8s-dns-dnsmasq-nanny-amd64:1.14.5 | |
| Kubedns sidecar | gcr.io/google_containers/k8s-dns-sidecar-amd64:1.14.5 | |
| Kubedns autoscaler | gcr.io/google_containers/cluster-proportional-autoscaler-amd64:1.0.0 | |

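Deploy the Kubernetes cluster

Create the rke user and set up passwordless SSH for it

Switch to the rke user with su - rke. Every cluster node needs passwordless SSH access, including access from a node to itself.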

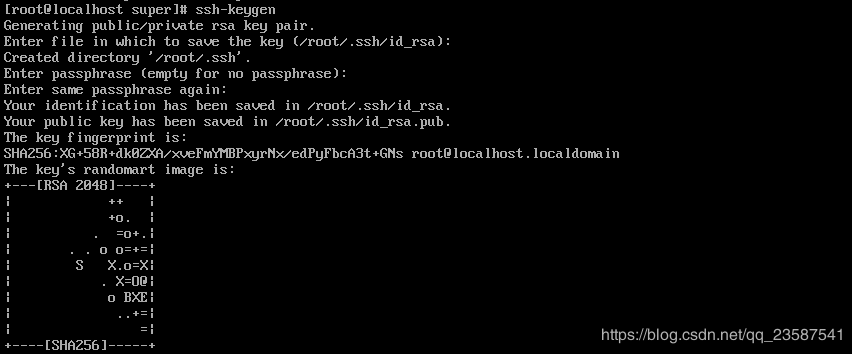

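1. Generate a key pair in the default format (for example with ssh-keygen). This creates id_rsa (the private key) and id_rsa.pub (the public key) in the .ssh directory of the current user (/root/.ssh/ when run as root).

Check the contents of the .ssh directory: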

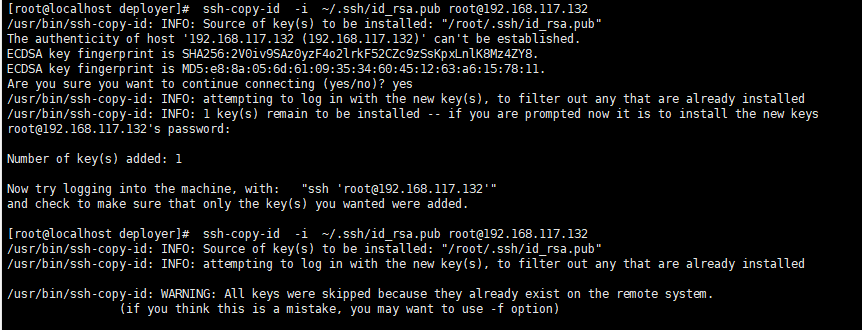

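2. Copy the public key id_rsa.pub to the remote host. ssh-copy-id appends it to the authorized_keys file in the .ssh directory of the remote user. (The remote host 192.168.17.130 is used as an example here.)

    ssh-copy-id -i ~/.ssh/id_rsa.pub rke@192.168.17.130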

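Disable swap

The kubelet requires swap to be disabled on worker nodes. Disable it as follows.

1) Disable swap permanently

Edit /etc/fstab and comment out the swap entry.

2) Disable swap temporarily (until the next reboot) with swapoff -a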
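The permanent option can be scripted. The following is a minimal sketch, assuming the swap entries in /etc/fstab are ordinary lines with a whitespace-separated `swap` field; the function name `comment_out_swap` is made up for this illustration.

```shell
#!/usr/bin/env bash
# Comment out every active swap entry in an fstab-style file.
# A backup copy of the original is kept next to it with a .bak suffix.
comment_out_swap() {
  local fstab="$1"
  # Only touch lines that are not already commented and contain a "swap" field.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

On a node this would typically be run as root, for example `swapoff -a && comment_out_swap /etc/fstab`, so that swap is off immediately and stays off after a reboot.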

Add the rke user to the docker group

    usermod -aG docker <user_name>

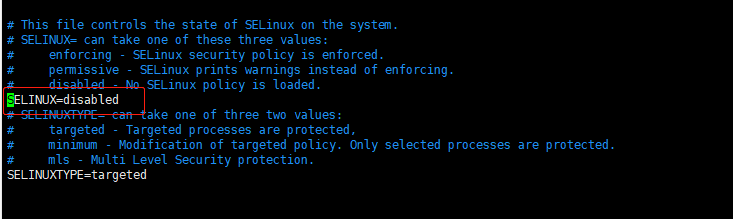

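Disable the firewall

Download rke and kubectl

(1) Download the latest rke release from https://github.com/rancher/rke/releases/ and pick rke_linux-amd64.

    mv rke_linux-amd64 rke
    chmod +x rke
    mv rke /usr/bin/

(2) Download kubectl: https://storage.googleapis.com/kubernetes-release/release/v1.7.0/bin/darwin/amd64/kubectl (this URL points to the macOS build; on the Linux nodes use the linux/amd64 path of the same release instead).

    chmod +x kubectl
    mv kubectl /usr/bin/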

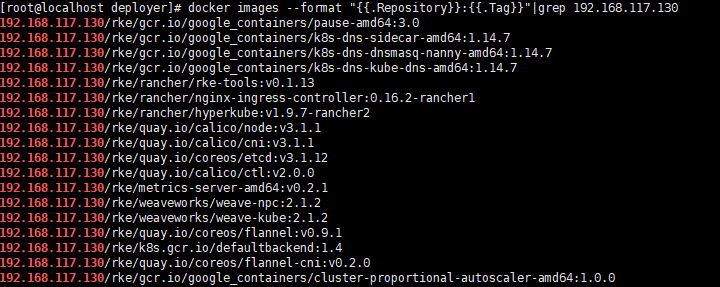

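Deploy the private container registry Harbor

First upload the images needed to build the cluster to a public Harbor project. On the machine that holds the images, select the images with a specific registry prefix so they can be saved:

    docker images --format "{{.Repository}}:{{.Tag}}" | grep 192.168.17.130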
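Listing and saving the images can be combined in one helper. This is only a sketch: the function names and the `DOCKER` override variable are invented for illustration, and it assumes all wanted images share the registry prefix.

```shell
#!/usr/bin/env bash
# DOCKER can be overridden (for example with a stub function) for dry runs.
DOCKER=${DOCKER:-docker}

# Print every local image reference that starts with "<registry>/".
list_registry_images() {
  local registry="$1"
  $DOCKER images --format '{{.Repository}}:{{.Tag}}' |
    awk -v prefix="${registry}/" 'index($0, prefix) == 1'
}

# Save all images of one registry into a single tar archive.
save_registry_images() {
  local registry="$1" archive="$2"
  local images
  images=$(list_registry_images "$registry")
  if [ -z "$images" ]; then
    echo "no images found for ${registry}" >&2
    return 1
  fi
  # Word splitting is intended: each image reference becomes one argument.
  $DOCKER save -o "$archive" $images
}
```

For example, `save_registry_images 192.168.17.130 rke-images.tar` would write one archive that can be restored elsewhere with `docker load -i rke-images.tar`. The prefix is matched literally rather than as a regular expression, so the dots in the IP address cannot match other characters.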

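Download link for all images: https://pan.baidu.com/s/1flpjyrVHX283f-t0b7RFtQ (extraction code: ubct)

Edit the hosts file (optional)

    vim /etc/hosts

Edit the cluster configuration file cluster.yml

You can generate cluster.yml by running ./rke config as the rke user on the host. For convenience, I uploaded a prepared cluster.yml instead; its contents are as follows:

Create the cluster.yml file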

The file (150 lines) defines three nodes, 192.168.17.129, 192.168.17.130, and 192.168.17.131, each with SSH port "22". Most other values are empty strings or false. The kubelet is given the following argument string:

    KUBELET_SYSTEM_PODS_ARGS=--pod-manifest-path=/etc/kubernetes/manifests --allow-privileged=true --fail-swap-on=false
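For orientation, a much smaller cluster.yml can be generated with a short helper. This is a sketch under assumptions, not the author's file: the role assignment (first node as control plane and etcd, the rest as workers), the canal network plugin entry, and the function name `write_cluster_yml` are illustrative, and the field names follow the RKE cluster.yml format as I recall it. Compare the result with the output of ./rke config for your RKE version.

```shell
#!/usr/bin/env bash
# Write a minimal RKE cluster.yml.
# Usage: write_cluster_yml OUTPUT_FILE SSH_USER NODE_ADDRESS...
# Assumption: the first address becomes controlplane+etcd, the others workers.
write_cluster_yml() {
  local out="$1" user="$2"; shift 2
  local first=1 addr
  {
    echo "nodes:"
    for addr in "$@"; do
      echo "  - address: ${addr}"
      echo "    port: \"22\""
      echo "    user: ${user}"
      if [ "$first" -eq 1 ]; then
        echo "    role: [controlplane, etcd]"
        first=0
      else
        echo "    role: [worker]"
      fi
    done
    # Tolerate enabled swap, mirroring --fail-swap-on=false in the original file.
    cat <<'EOF'
services:
  kubelet:
    extra_args:
      fail-swap-on: "false"
network:
  plugin: canal
EOF
  } > "$out"
}
```

A call such as `write_cluster_yml cluster.yml rke 192.168.17.129 192.168.17.130 192.168.17.131` produces a file for the three nodes used in this article.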

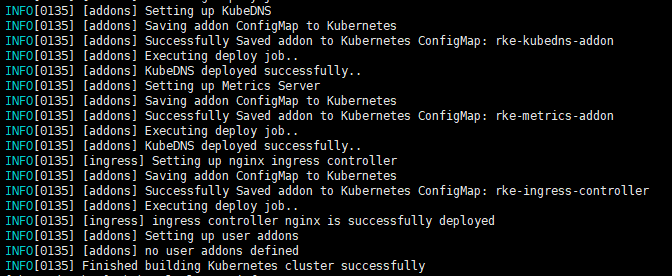

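Start rke and deploy the cluster

Run ./rke up in the directory that contains cluster.yml, or pass the file explicitly:

    rke up --config cluster.yml

Output like the figure below indicates success.

Move the cluster configuration file

After rke deploys the cluster successfully, it generates the file kube_config_cluster.yml. Copy it to the default kubectl location:

    mkdir -p /home/rke/.kube
    cp kube_config_cluster.yml /home/rke/.kube/config

Delete the Kubernetes cluster

Run rke remove in the directory that contains cluster.yml, or pass the file explicitly:

    rke remove --config cluster.yml

Clean up the Docker containers by running the following on every node:

    docker rm -fv $(docker ps -aq)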

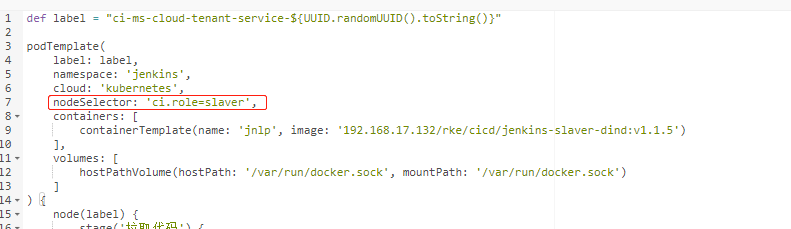

Create labels for cluster nodes

Add a label to a node:

    kubectl label node 192.168.17.131 ci.role=slave

List nodes together with their labels:

    kubectl get node --show-labels

Query nodes that carry a specific label:

    kubectl get node -l "ci.role=slave"

To delete a label, append the label key followed by a minus sign:

    kubectl label nodes 192.168.17.131 ci.role-

The pipeline can then use the statement nodeSelector: 'ci.role=slave'.

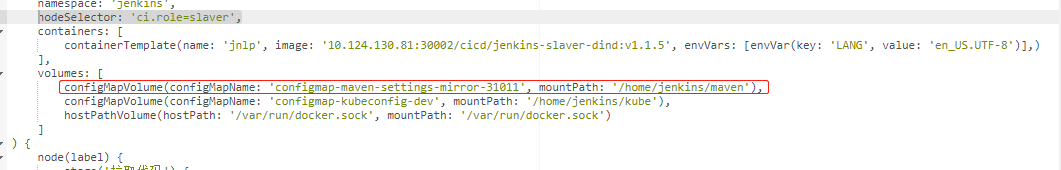

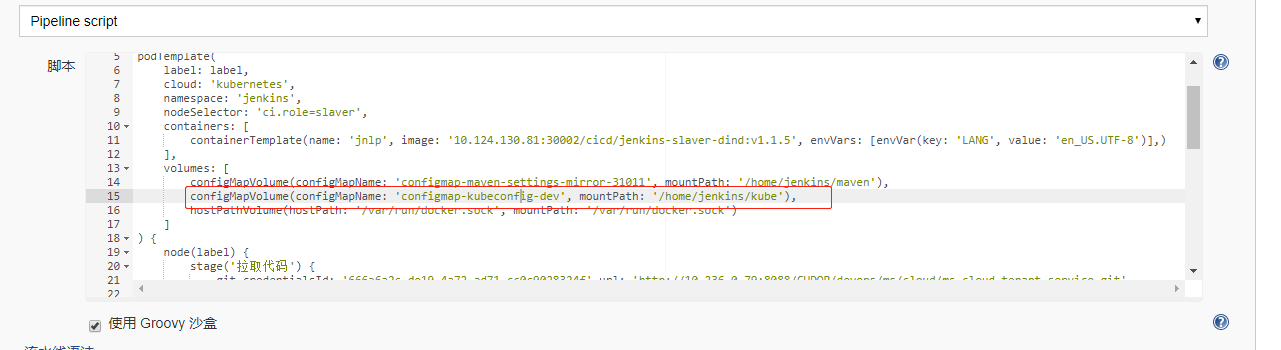

Create the Maven settings for the cluster

Create the file configmap-setting.yaml. It is a ConfigMap (apiVersion: v1, 65 lines) whose data holds a Maven settings.xml using the schema http://maven.apache.org/SETTINGS/1.0.0 (http://maven.apache.org/xsd/settings-1.0.0.xsd). The settings declare servers and mirrors with the ids chinaunicom, maven-snapshot-manager, and maven-release-manager.

Run:

    kubectl create -f configmap-setting.yaml

This creates a ConfigMap named configmap-maven-settings-mirror-31010, which the pipeline can then mount as a volume.

View the ConfigMaps:

    kubectl get configmap -n jenkins

Create a kubeconfig ConfigMap for the cluster

Create the file kubeconfig-dev.yaml. It is a ConfigMap (apiVersion: v1, 26 lines) that wraps a kubeconfig with the server "https://192.168.17.129:6443", the cluster and context "local", and the user "kube-admin-local".

Run:

    kubectl create -f kubeconfig-dev.yaml

This creates a ConfigMap named configmap-kubeconfig-dev, which the pipeline can then mount as a volume.

Debug the cluster

View cluster deployment information

Check whether the Kubernetes nodes are working properly:

    kubectl get nodes -o wide

List the deployments in all namespaces:

    kubectl get deployment --all-namespaces

List the services in all namespaces:

    kubectl get svc --all-namespaces

List the pods in all namespaces:

    kubectl get pod -o wide --all-namespaces

Follow the logs of a pod:

    kubectl logs -f kubernetes-dashboard-7f9f8cc4cf-tm2ld -n kube-system

Delete a pod:

    kubectl delete pod ms-cloud-tenant-service-5dc69784b-f42vh -n cloud

Show the details of a failed pod:

    kubectl describe pod metrics-server-6c84bc5674-tf4qw -n kube-system

Show the description of an image:

    docker inspect 10.124.133.192/devops/jenkins-slaver-dind:v1.0.0

Watch pod status changes in a namespace in real time:

    kubectl get pod -o wide -n jenkins -w

List all started containers (including failed ones):

    docker ps -a

Deploy the Kubernetes dashboard

Reference blog: https://www.kubernetes.org.cn/4004.html

Deploy the dashboard

(1) Create the file k8s-dashboard.yaml (164 lines). It contains a Secret of type: Opaque and a Role with the following rules:

create on secrets
create on configmaps
get, update, delete on the secrets kubernetes-dashboard-key-holder and kubernetes-dashboard-certs
get, update on the configmap kubernetes-dashboard-settings
proxy on the service heapster
get on services/proxy for heapster, http:heapster:, and https:heapster:

Run:

    kubectl create -f k8s-dashboard.yaml

(2) Create the file dashboard-usr.yaml (18 lines, beginning with apiVersion: v1).

Run:

    kubectl create -f dashboard-usr.yaml

(3) After modifying the YAML file, update the deployment with:

    kubectl apply -f dashboard-usr.yaml

Access the dashboard

Access through kubectl proxy on Windows

(1) Install kubectl

    curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.7.0/bin/windows/amd64/kubectl.exe

(2) Copy the cluster's kube_config_cluster.yml file to a local config.yml, then run the following in cmd:

    kubectl --kubeconfig=C:\Users\Administrator\Desktop\config.yml proxy

(3) Open the dashboard in a browser:

    http://localhost:8001/api/v1/namespaces/kube-system/services/https:kubernetes-dashboard:/proxy/

(4) Get the token for the web login:

    kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

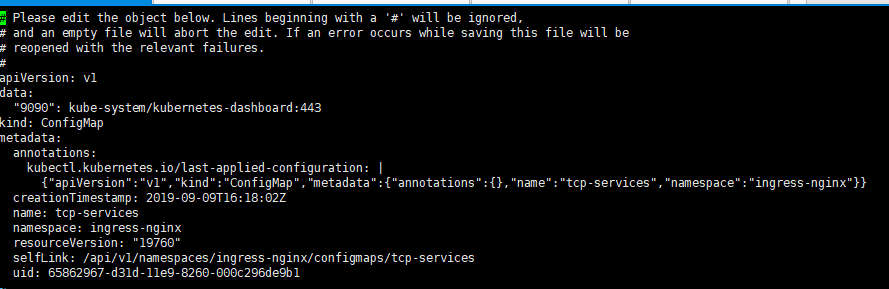

Access through the ingress-nginx TCP services ConfigMap

(1) Edit the ConfigMap

    kubectl edit configmap tcp-services -n ingress-nginx

Add the following two lines:

    data:
      "9090": kube-system/kubernetes-dashboard:443

(2) Get the login token:

    kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

(3) If the first deployment reported an error, delete the key-holder secret:

    kubectl --kubeconfig ../kube_config_cluster.yml delete secret kubernetes-dashboard-key-holder -n kube-system

Dashboard address: https://192.168.17.131:9090

Add graphical metrics to the Kubernetes dashboard

Upload the files heapster-grafana.yaml, heapster.yaml, and influxdb.yaml, then create the resources.

(1) Create the file heapster-grafana.yaml (65 lines). It defines a Deployment (apiVersion: apps/v1) whose container environment includes the values "3000", "false", and "true", and a Service of type: NodePort.

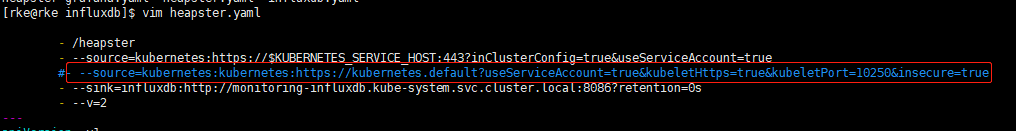

(2) Create the file heapster.yaml (63 lines, beginning with apiVersion: v1). The heapster container command includes:

    --source=kubernetes:kubernetes:https://kubernetes.default?useServiceAccount=true&kubeletHttps=true&kubeletPort=10250&insecure=true

(3) Create the file influxdb.yaml (44 lines). It defines a Deployment (apiVersion: apps/v1) and a Service of type: NodePort.

(4) Create the pods

    kubectl create -f heapster-grafana.yaml
    kubectl create -f heapster.yaml
    kubectl create -f influxdb.yaml

--source: specifies the cluster to connect to.

--sink: specifies the backend data store. Here it is the InfluxDB database.

Chapter 2: Building the automated build and deployment pipeline

Deploy jenkins-master

(1) Download the jenkins-master image: https://pan.baidu.com/s/1lhllaOIsvDXJoiFMPx47Qw (extraction code: z24p), https://pan.baidu.com/s/1hROj7nt_iw0Vf1tQTShfYA (extraction code: iieu)

(2) Create the data directory on 192.168.17.131

    mkdir /jenkins-data

(3) Grant permissions

    chown -R 1000:1000 /jenkins-data

(4) Create the file jenkins-k8s-resources.yml (106 lines). It defines a Deployment (apiVersion: apps/v1beta1) with a hostPath volume of type: DirectoryOrCreate, a Service of type: NodePort, and a Role with the following rules:

pods: create, delete, get, list, patch, update, watch
pods/exec: create, delete, get, list, patch, update, watch
pods/log: get, list, watch
secrets: get

(5) Start jenkins-master

    kubectl create -f jenkins-k8s-resources.yml

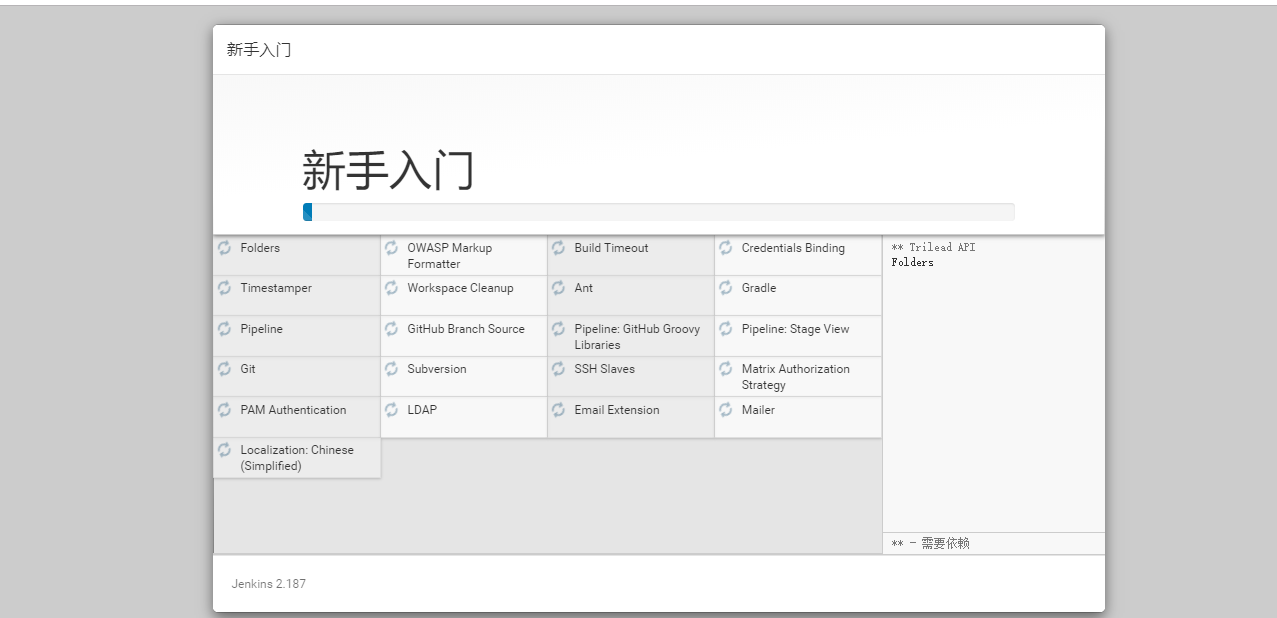

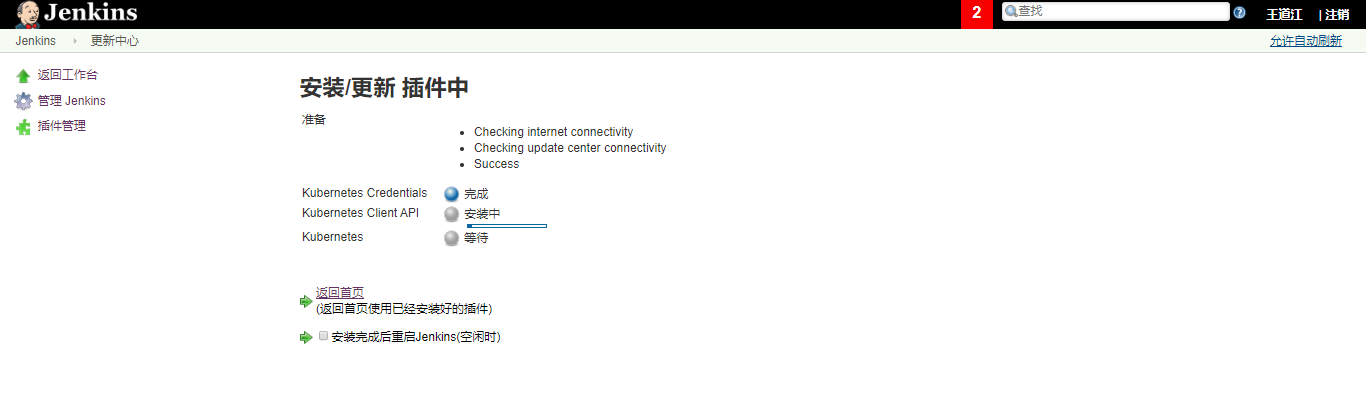

(6) Install the Kubernetes plugin in Jenkins

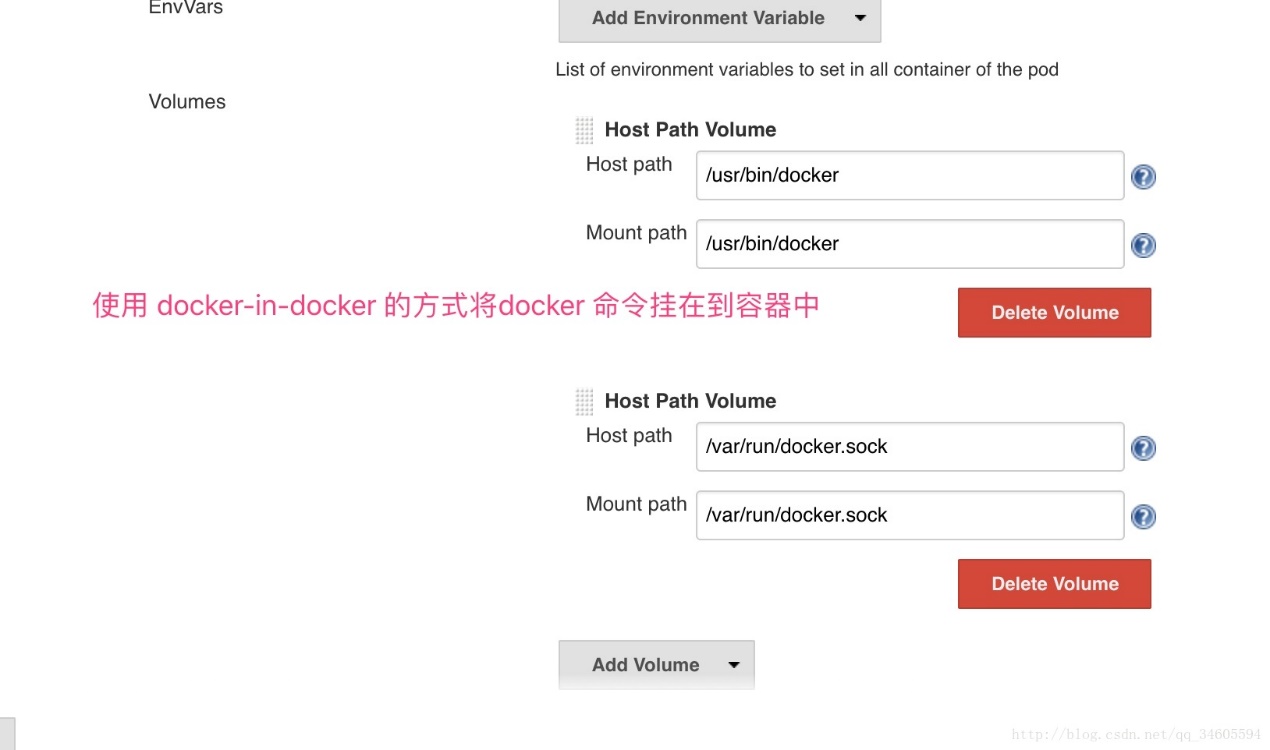

Deploy jenkins-slave

Build the jenkins-slave base image

(1) The image is based on openshift/jenkins-slave-base-centos7:latest and includes common build tools such as maven, nodejs, helm, go, and beego.

Download link: https://pan.baidu.com/s/1Wvs1pRotGWqTT_6BLoRrTg (extraction code: xvde)

(2) Edit the Dockerfile (79 lines). Its main steps are:

FROM openshift/jenkins-slave-base-centos7:latest, with a description label matching the sentence in (1)
JDK in /usr/java/jdk1.8.0_181 (chown root:root, chmod 755), with $JAVA_HOME/bin added to PATH
Maven in /opt/apache-maven-3.6.0 (chown root:root, chmod 755), symlinked to /opt/maven, with $M2_HOME/bin added to PATH
A sed command that inserts a mirror with id self-maven, mirrorOf self-maven, name self-maven, and url http://10.236.5.18:8088/repository/maven-public/ after line 146 of /opt/maven/conf/settings.xml
Node.js in /opt/node-v10.15.3-linux-x64 (chown root:root, chmod 755), symlinked to /opt/node, with $NODE_HOME/bin added to PATH
$HELM_HOME/linux-amd64, $GOROOT/bin, and $GOPATH/bin added to PATH

(3) Run the build command

    docker build -t jenkins-slave:2019-09-11-v1 .

Note the trailing dot: it makes the current directory the build context, so the Dockerfile in that directory is used.

Download link for the resulting image: https://pan.baidu.com/s/1Wvs1pRotGWqTT_6BLoRrTg (extraction code: xvde)

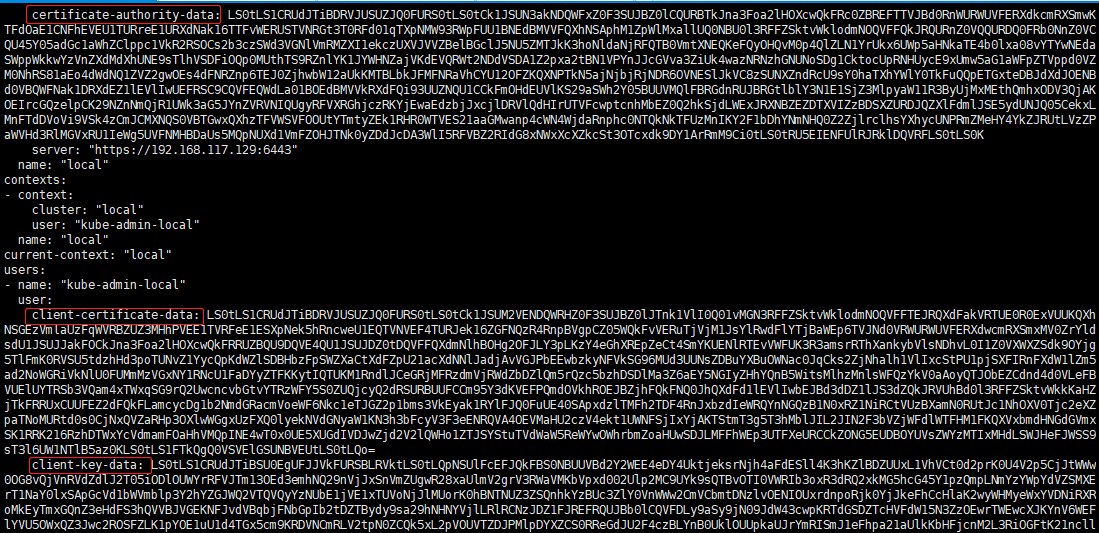

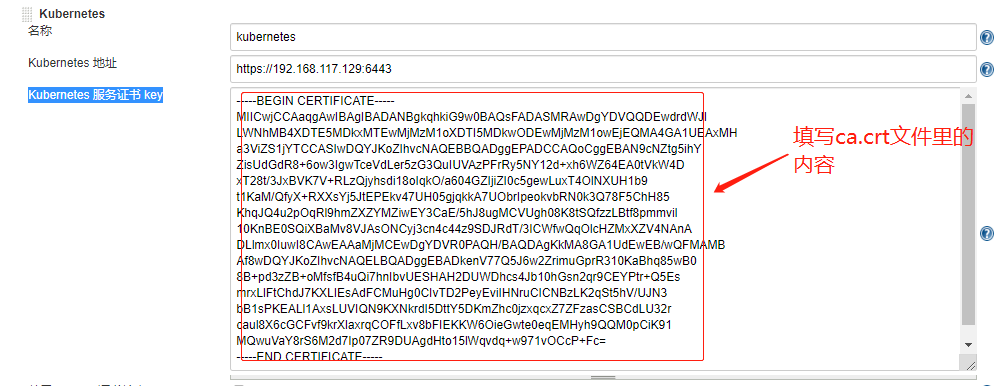

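Generate client certificates for connecting to the Kubernetes cluster

1) Generate the certificate and key used to connect to the api-server, then test the connection

    cat kube_config_cluster.yml

Run the following commands, replacing certificate-authority-data, client-certificate-data, and client-key-data with the corresponding values from the file:

    echo certificate-authority-data | base64 -d > ca.crt
    echo client-certificate-data | base64 -d > client.crt
    echo client-key-data | base64 -d > client.key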
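Copying the three base64 values by hand is error-prone, so the extraction can be scripted. The sketch below assumes each key appears once in the kubeconfig with its value on the same line, as in the file RKE generates for a single cluster; the function names are made up for this example.

```shell
#!/usr/bin/env bash
# Print the base64-decoded value of one key from a kubeconfig file.
extract_kubeconfig_field() {
  local kubeconfig="$1" key="$2"
  # Take the first line whose first field is "<key>:", strip optional quotes.
  awk -v key="$key" '$1 == (key ":") { v = $2; gsub(/"/, "", v); print v; exit }' "$kubeconfig" |
    base64 -d
}

# Write ca.crt, client.crt and client.key into the given directory.
extract_client_certs() {
  local kubeconfig="$1" outdir="$2"
  mkdir -p "$outdir"
  extract_kubeconfig_field "$kubeconfig" certificate-authority-data > "${outdir}/ca.crt"
  extract_kubeconfig_field "$kubeconfig" client-certificate-data > "${outdir}/client.crt"
  extract_kubeconfig_field "$kubeconfig" client-key-data > "${outdir}/client.key"
}
```

Running `extract_client_certs kube_config_cluster.yml .` leaves the three files in the current directory, ready for the openssl pkcs12 step.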

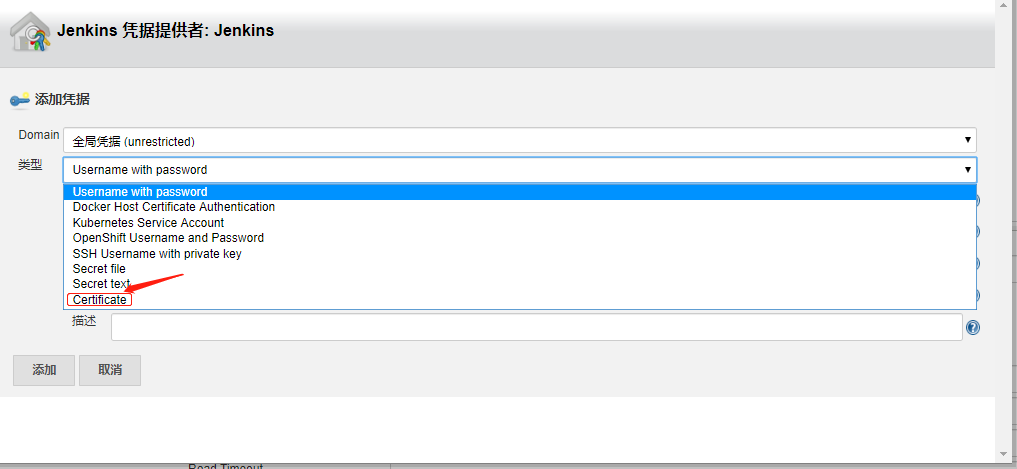

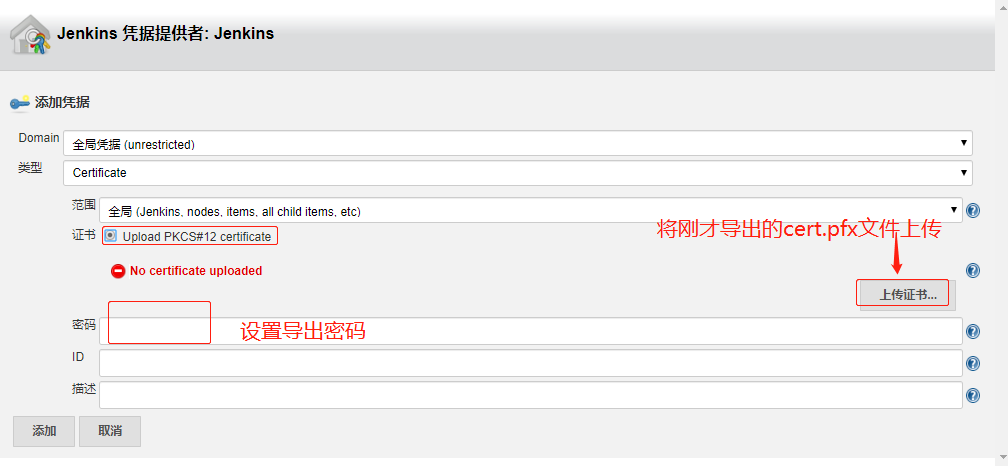

Then generate the client authentication certificate cert.pfx from the files above:

    openssl pkcs12 -export -out cert.pfx -inkey client.key -in client.crt -certfile ca.crt

Reference: http://www.mamicode.com/info-detail-2399348.html

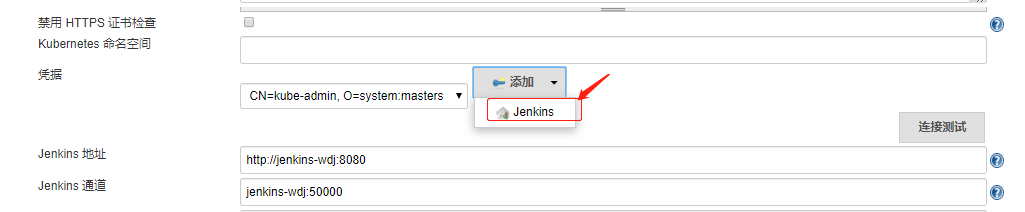

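Configure jenkins-master (for reference)

Open the Jenkins UI and click through the settings in order.

Click Save when the configuration is complete.

Create a service account for Jenkins:

    kubectl create serviceaccount jenkins-wdj -n jenkins

Its token is then read from the account's secret with a jsonpath query for {.data.token} and decoded with base64 -d.

https://www.iteye.com/blog/m635674608-2361440

Configure jenkins-master (the approach used here)

(1) Create a copy of the kube_config_cluster.yml file that was generated when the cluster was started;

(2) Manage Jenkins -> Manage Plugins -> Available -> search for the Kubernetes plugin -> install it directly;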

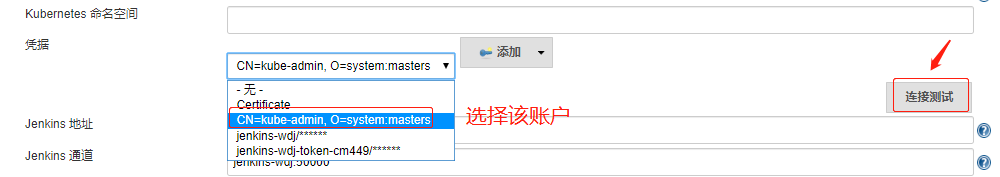

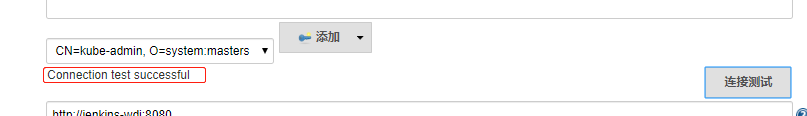

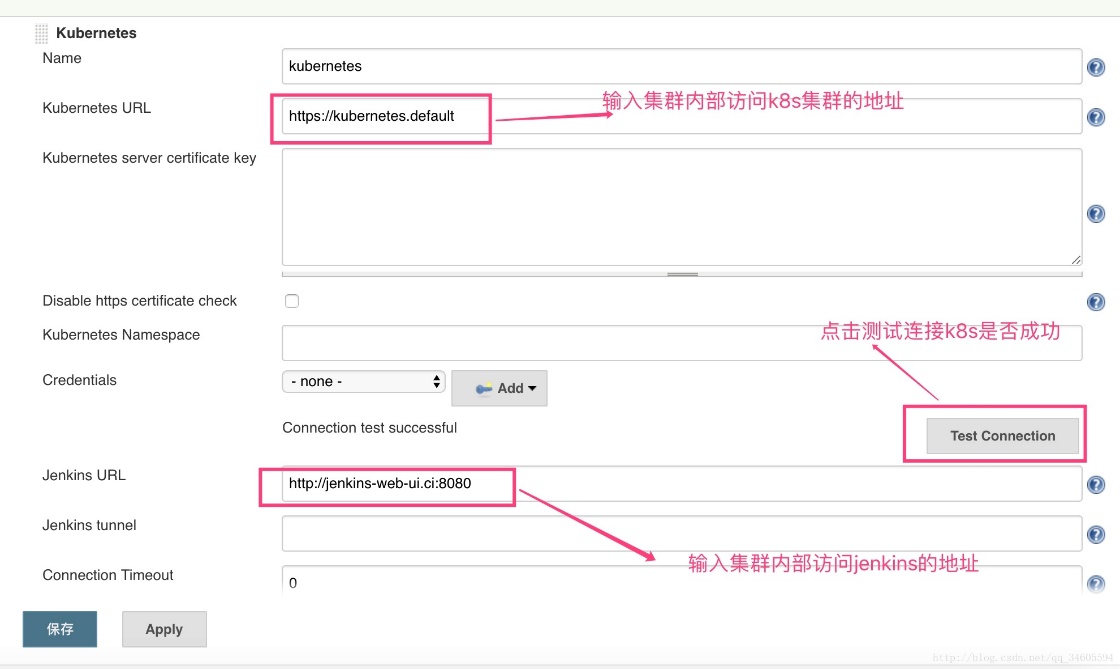

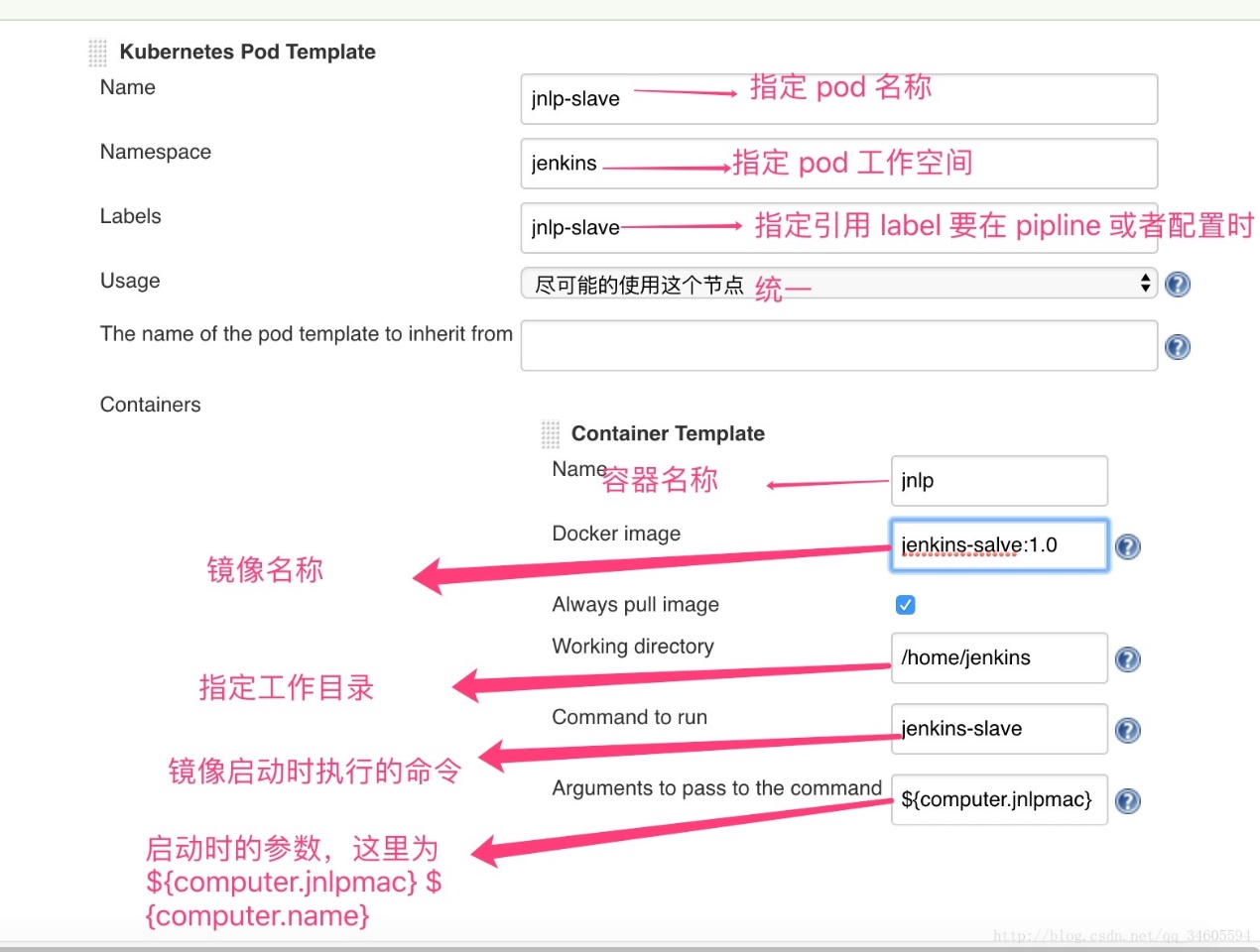

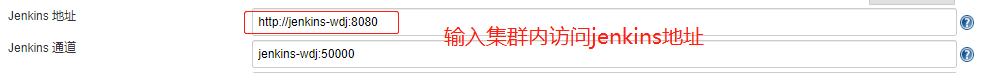

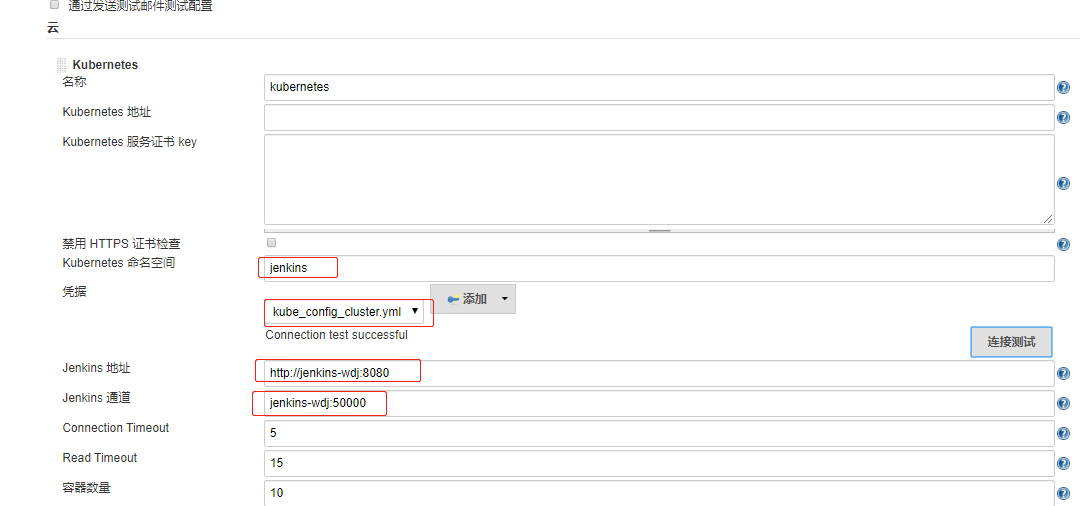

(3) Manage Jenkins -> Configure System -> add a new cloud, configured as follows:

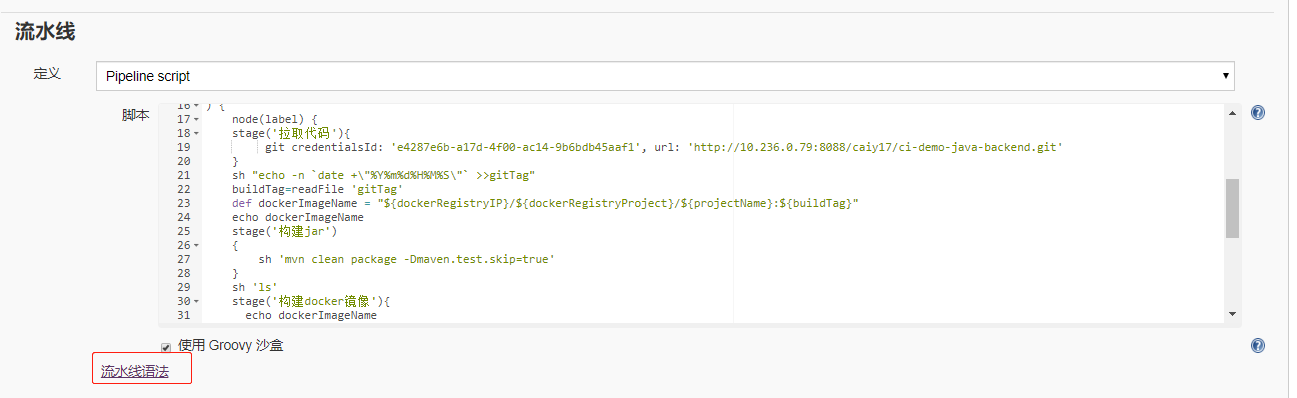

Create pipeline jobs

demo1

(1) Create a test pipeline job named demo;

(2) Script

    def name = "ci-demo-backend"
    def label = "${name}-${UUID.randomUUID().toString()}"

    podTemplate(
        label: label,
        namespace: 'jenkins',
        cloud: 'kubernetes',
        containers: [
            containerTemplate(name: 'jnlp', image: '192.168.17.132/rke/cicd/jenkins-slaver-dind:v1.1.5')
        ],
        volumes: [
            hostPathVolume(hostPath: '/var/run/docker.sock', mountPath: '/var/run/docker.sock')
        ]
    ) {
        node(label) {
            stage('test') {
                echo "hello, world"
                sleep 60
            }
        }
    }

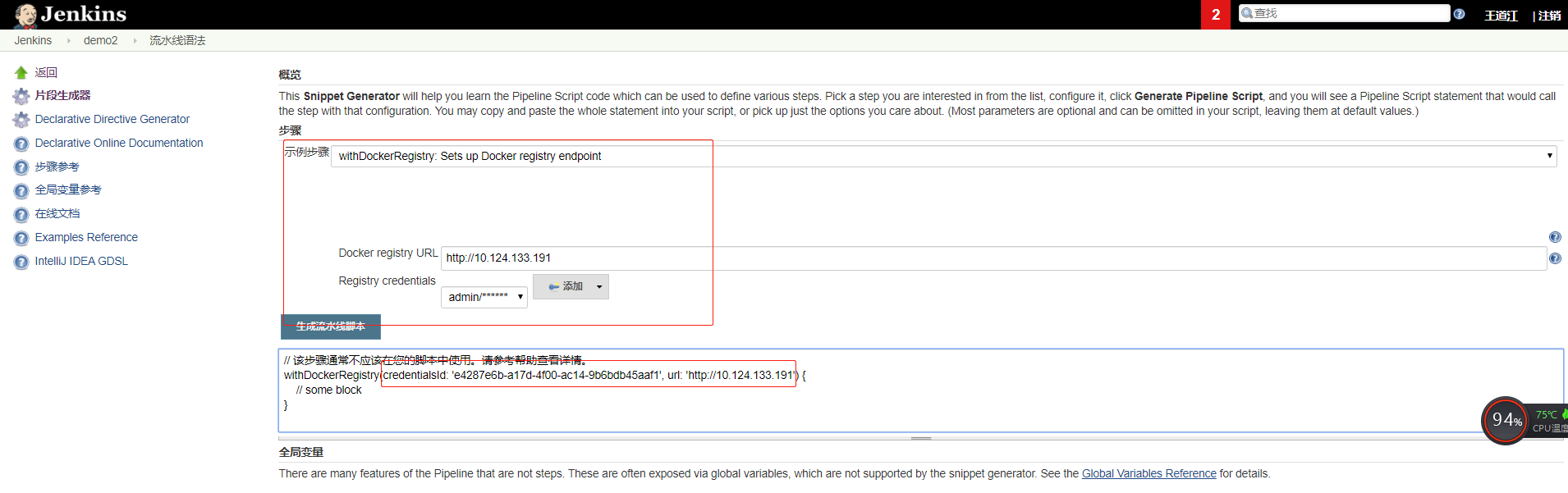

demo2

(1) Set up Harbor registry authentication in the Kubernetes cluster (for reference)

Generate the secret with kubectl. Each namespace needs its own secret; secrets are not shared across namespaces.

    kubectl create secret docker-registry harborsecret \

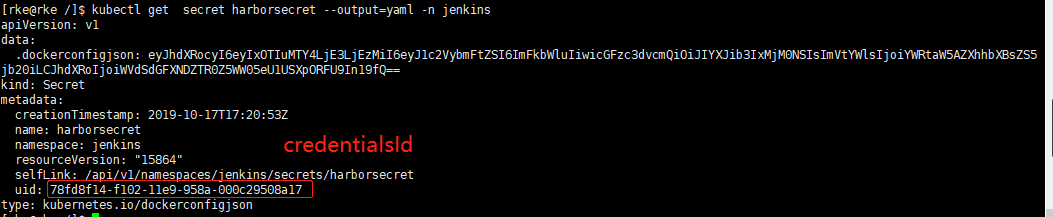

View the contents of this secret:

    kubectl get secret harborsecret --output=yaml -n jenkins
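Because every namespace needs its own copy of the secret, a loop keeps the copies consistent. This is a hedged sketch: the function name, the `KUBECTL` override, and the `HARBOR_PASSWORD` environment variable are assumptions for illustration, and the registry address, user, and e-mail in the usage line are placeholders.

```shell
#!/usr/bin/env bash
# KUBECTL can be overridden (for example with a stub function) for dry runs.
KUBECTL=${KUBECTL:-kubectl}

# Create the same docker-registry secret in each listed namespace.
# Usage: create_registry_secret NAME SERVER USERNAME EMAIL NAMESPACE...
# The password is read from the HARBOR_PASSWORD environment variable.
create_registry_secret() {
  local name="$1" server="$2" username="$3" email="$4"; shift 4
  local ns
  for ns in "$@"; do
    $KUBECTL create secret docker-registry "$name" \
      --docker-server="$server" \
      --docker-username="$username" \
      --docker-password="${HARBOR_PASSWORD:?set HARBOR_PASSWORD first}" \
      --docker-email="$email" \
      -n "$ns" || return 1
  done
}
```

A call might look like `HARBOR_PASSWORD=... create_registry_secret harborsecret 192.168.17.132 <user> <email> jenkins cloud`. Reading the password from the environment keeps it out of the script itself.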

(2) Add credentials for the GitLab and Harbor repositories (the approach used here)

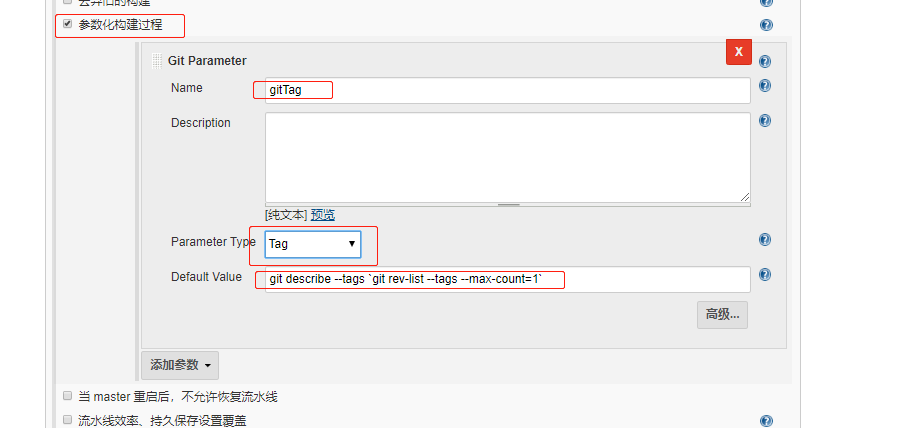

(3) Install the Git Parameter plugin

(4) Configure the parameterized build

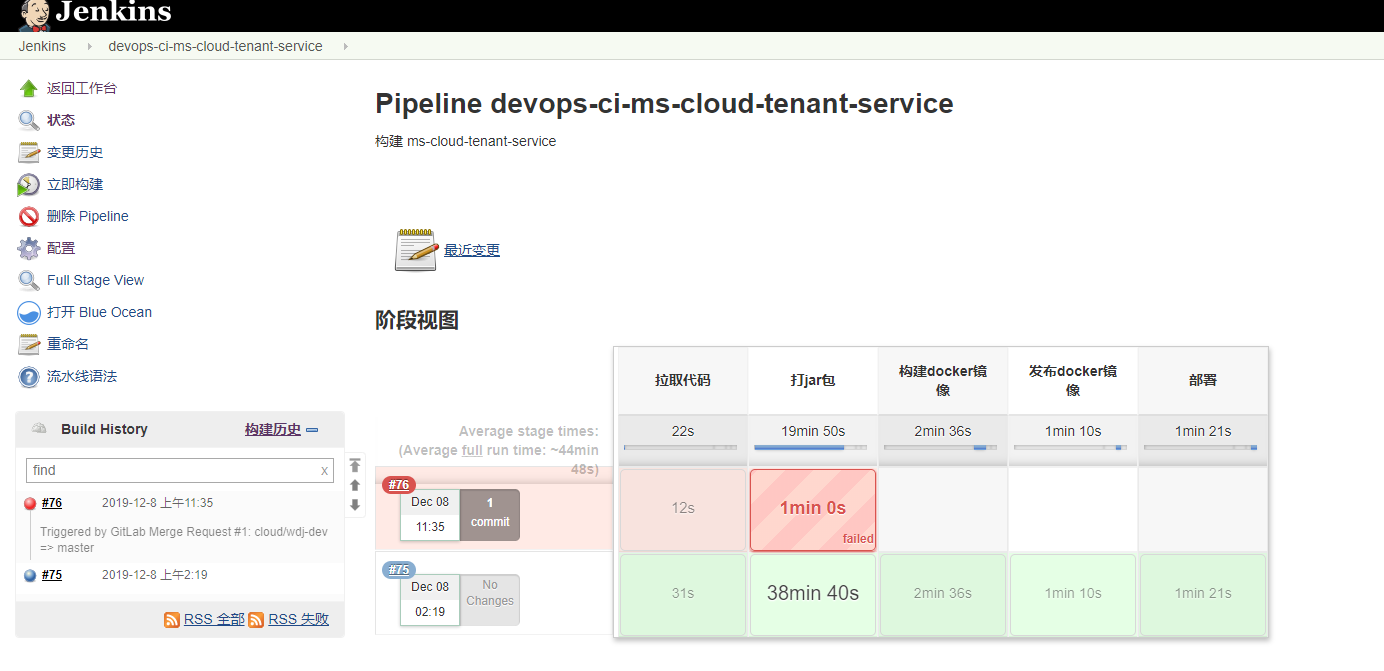

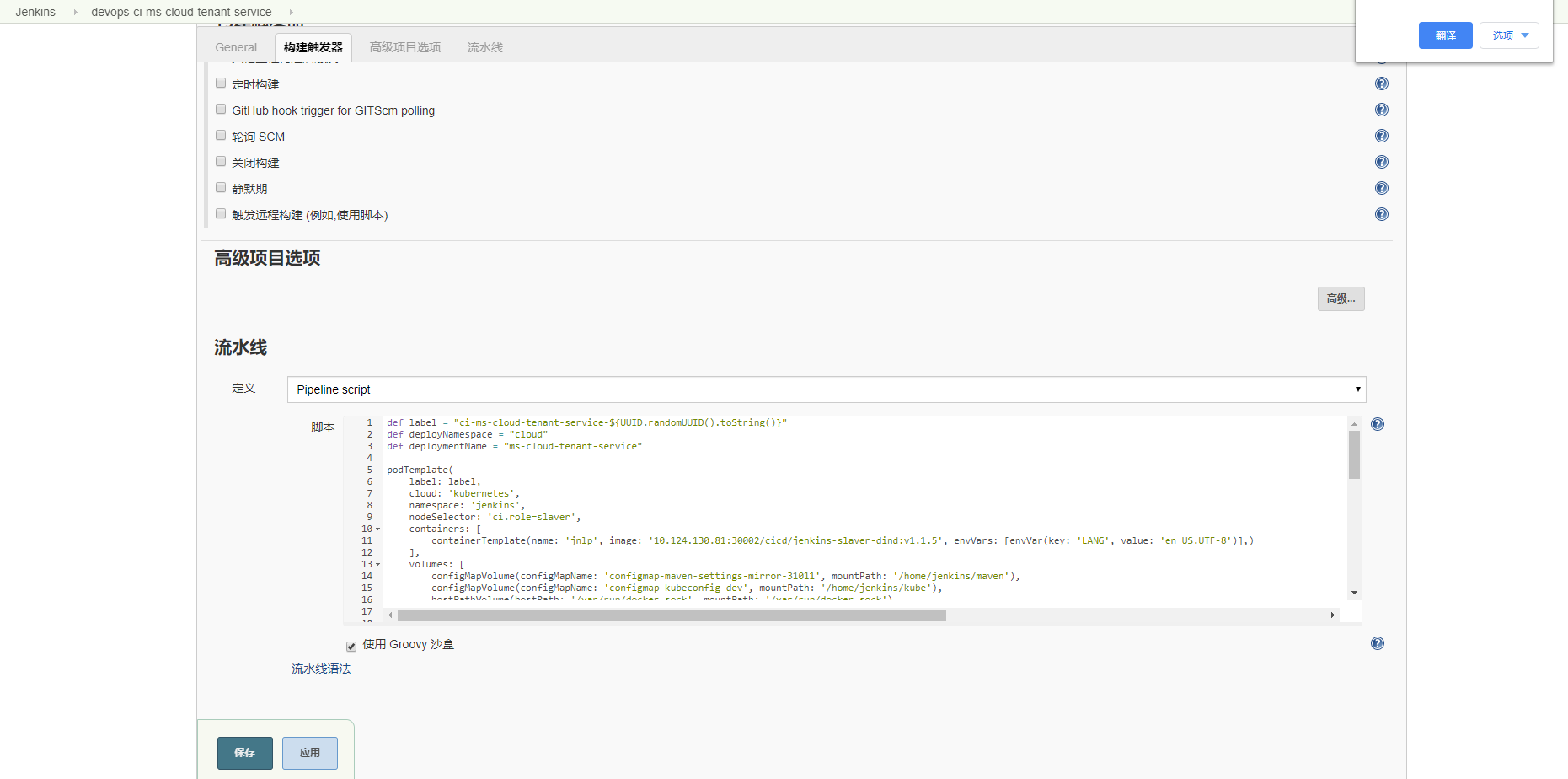

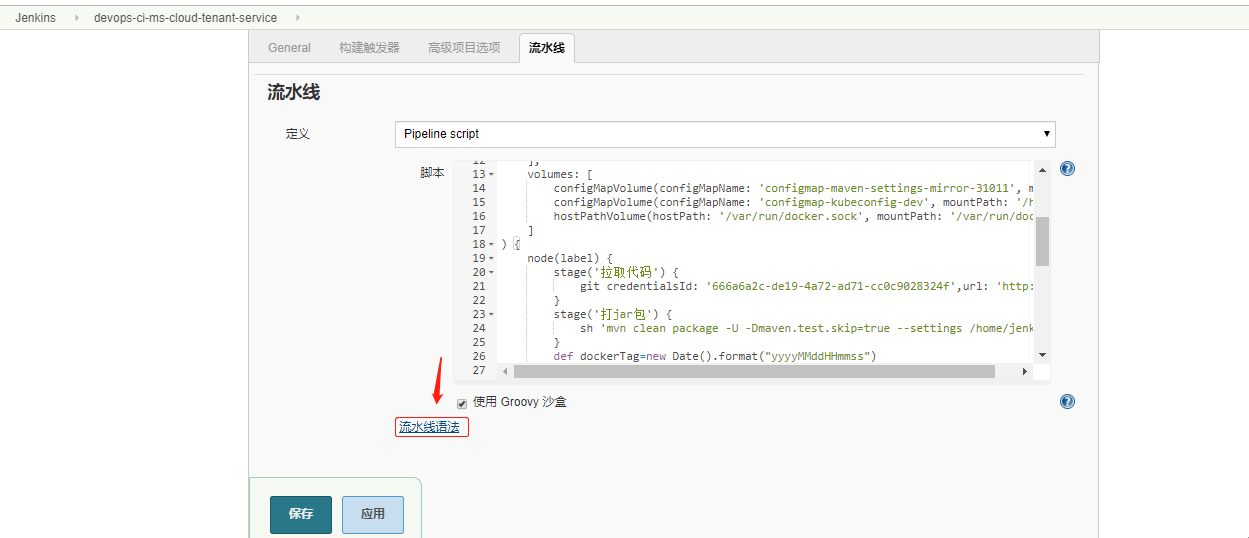

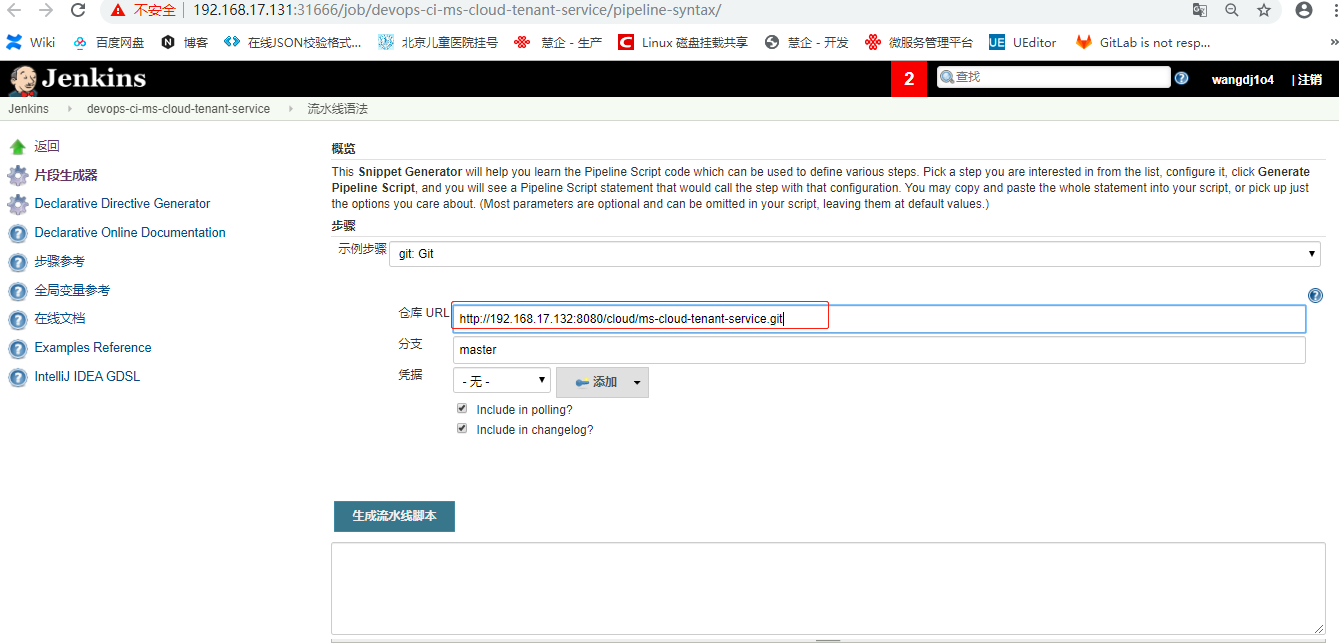

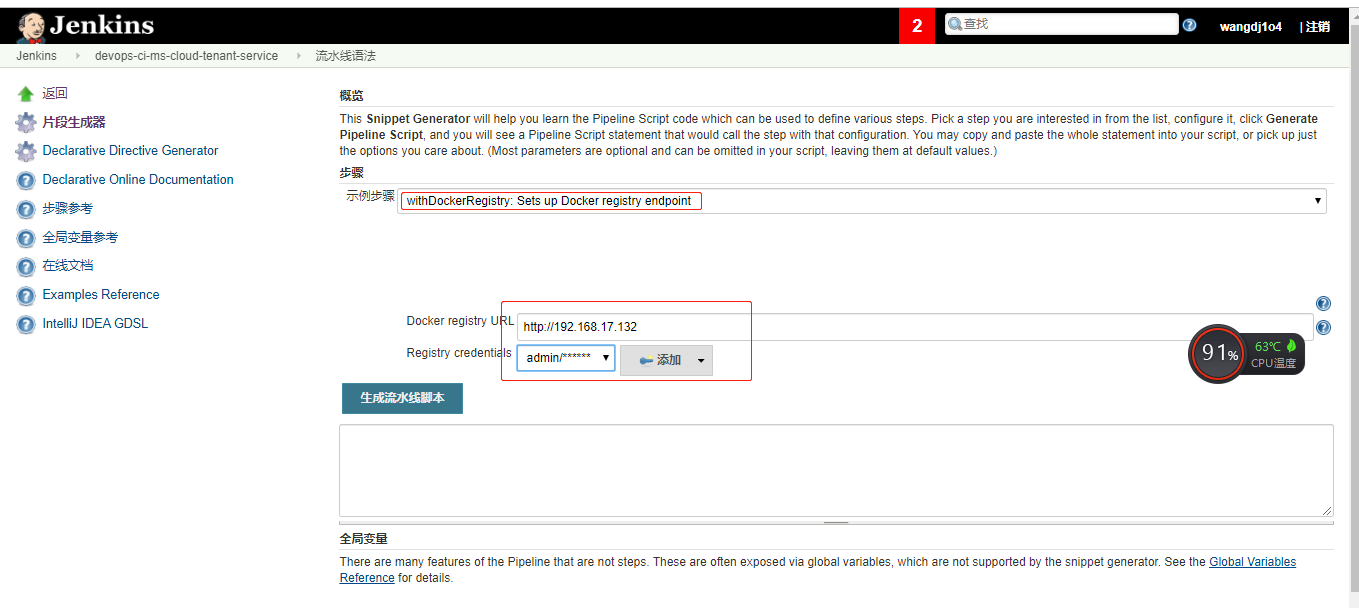

demo3

The pipeline script (45 lines) contains the following elements:

Variables: label = "ci-ms-cloud-tenant-service-${UUID.randomUUID().toString()}", deployNamespace "cloud", deploymentName "ms-cloud-tenant-service"

Pod template: cloud 'kubernetes', namespace 'jenkins', container 'jnlp' with image '192.168.17.132/rke/cicd/jenkins-slaver-dind:v1.1.5' and envVars: [envVar(key: 'LANG', value: 'en_US.UTF-8')]

Volumes: 'configmap-maven-setting-wdj' mounted at '/home/jenkins/maven', 'configmap-kubeconfig-dev' mounted at '/home/jenkins/kube', and '/var/run/docker.sock' mounted at '/var/run/docker.sock'

Stage "Pull code": checks out http://192.168.17.132:8080/cloud/ms-cloud-tenant-service.git with credentialsId 'ab716d57-9399-4978-bfb4-82eaccaea9d2'

Stage "Build jar": runs mvn clean package -U -Dmaven.test.skip=true --settings /home/jenkins/maven/settings.xml, then derives dockerTag from the date format "yyyyMMddHHmmss" and sets dockerImageName to "192.168.17.132/devops/ms-cloud-tenant-service:v${dockerTag}"

Stage "Build Docker image": runs docker build -f docker/Dockerfile --tag="${dockerImageName}" . then prints "===> finish build docker image: ${dockerImageName}" and runs docker images

Stage "Publish Docker image": using registry credentials '1049ee9b-99fd-42df-8c40-a818fe66ae5a' and url 'http://192.168.17.132/', runs docker push ${dockerImageName}, docker rmi ${dockerImageName}, and docker images

Stage "Deploy": runs kubectl --kubeconfig=/home/jenkins/kube/config -n ${deployNamespace} patch deployment ${deploymentName} -p '{"spec": {"template": {"spec": {"containers": [{"name":"${deploymentName}", "image":"${dockerImageName}"}]}}}}' then prints "==> deploy ${dockerImageName} successfully"

Reference: https://www.cnblogs.com/miaocunf/p/11694943.html

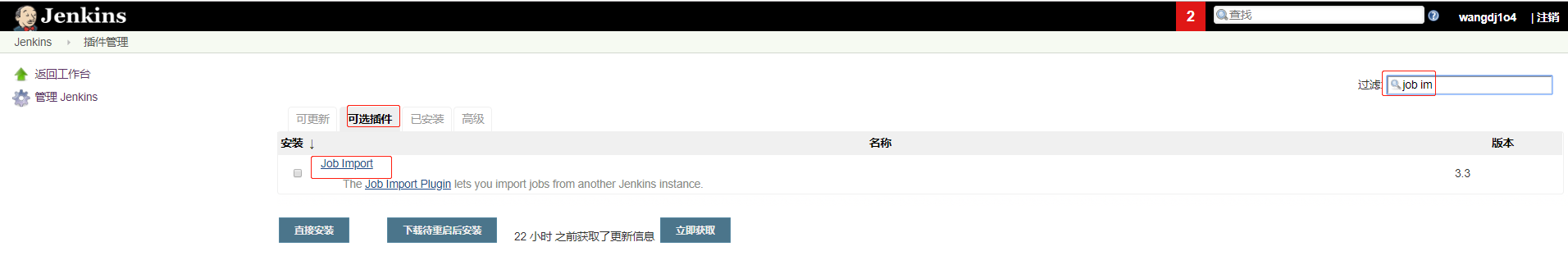

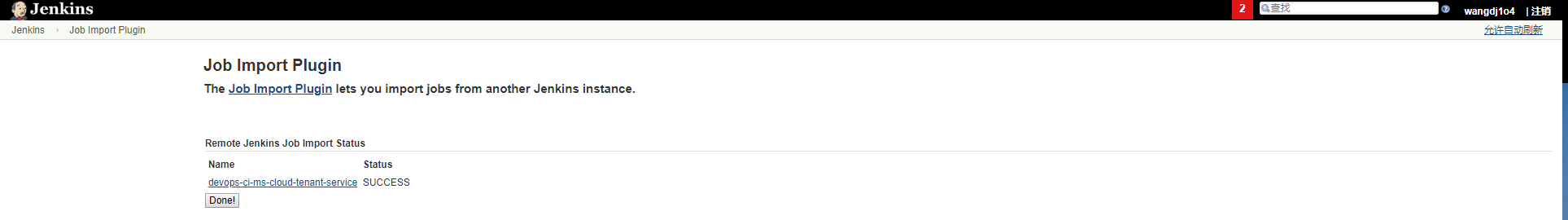

Migrating Jenkins jobs

Import with the Job Import Plugin (from http://10.244.6.41:31000 to the local 192.168.17.131:31666)

(1) On the new Jenkins, first install the Job Import Plugin from the plugin manager, as shown below:

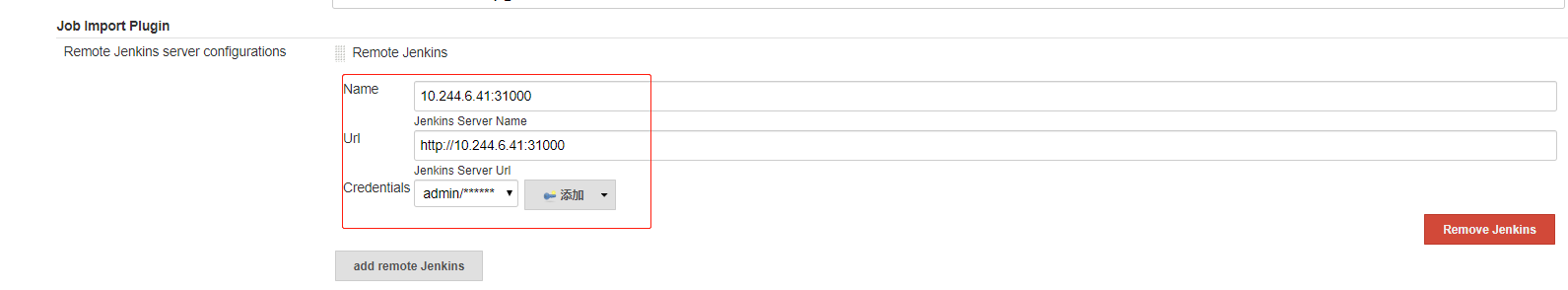

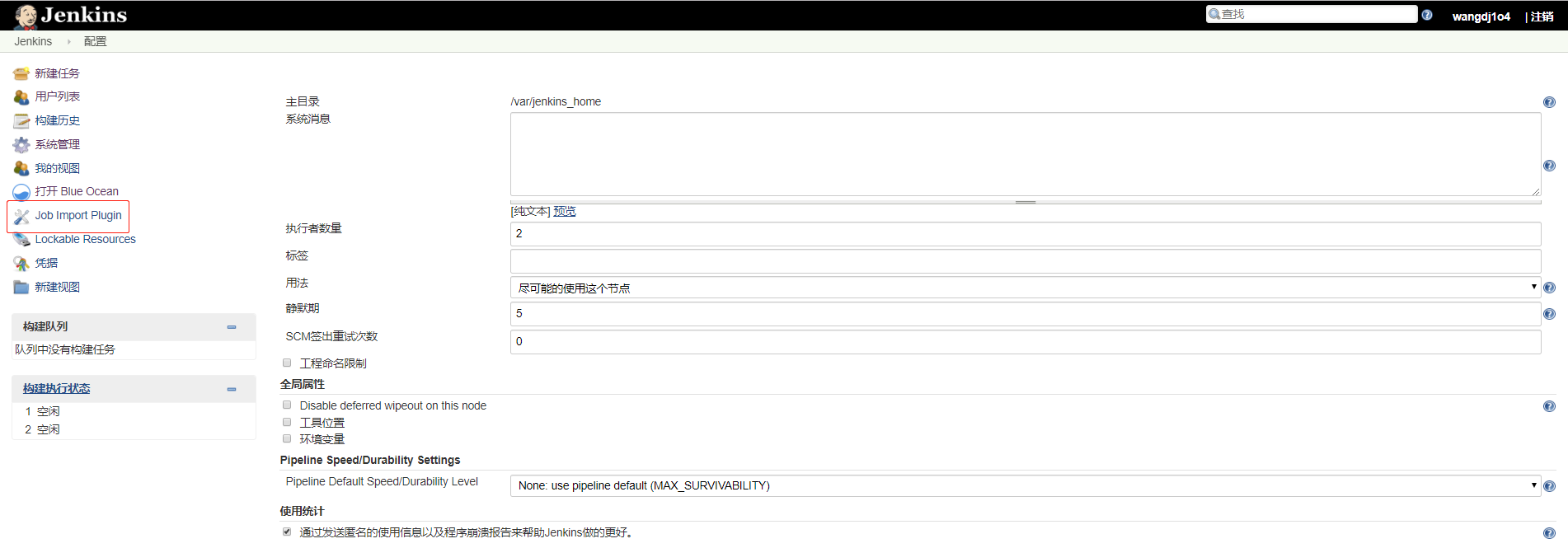

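(2) After installation, go to "Manage Jenkins" -> "Configure System", find the Job Import Plugin section, and configure it as follows:

Name: any name works; here I used the IP of the Jenkins to copy from.

Url: the URL of the Jenkins to copy jobs from. We are copying jobs from 192.168.9.10, so this is the URL of the Jenkins on 192.168.9.10.

Credentials: an account on the old Jenkins (the one on 192.168.9.10). If none has been added yet, click Add to create it; it can then be selected from the drop-down list as in the screenshot above.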

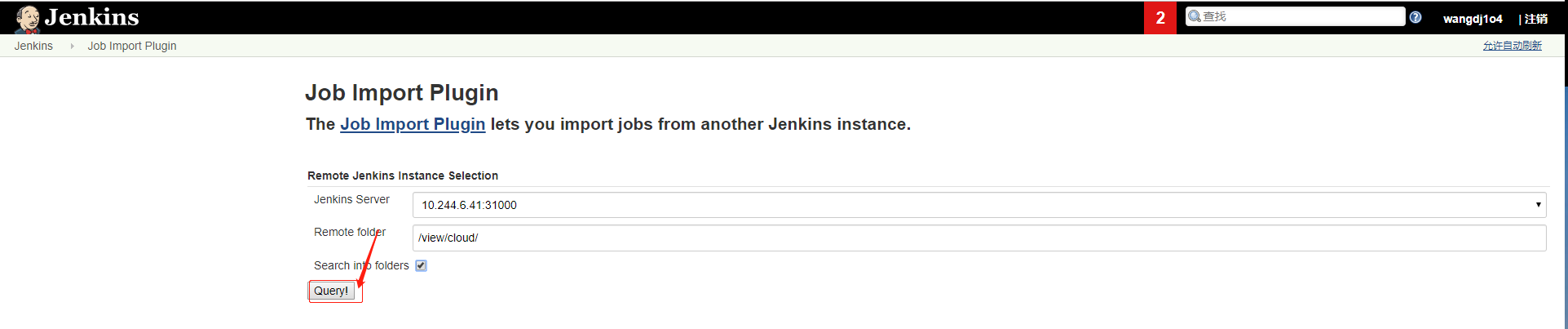

(3) Save the settings, return to the Jenkins home page, and click Job Import Plugin to start migrating jobs, as shown below:

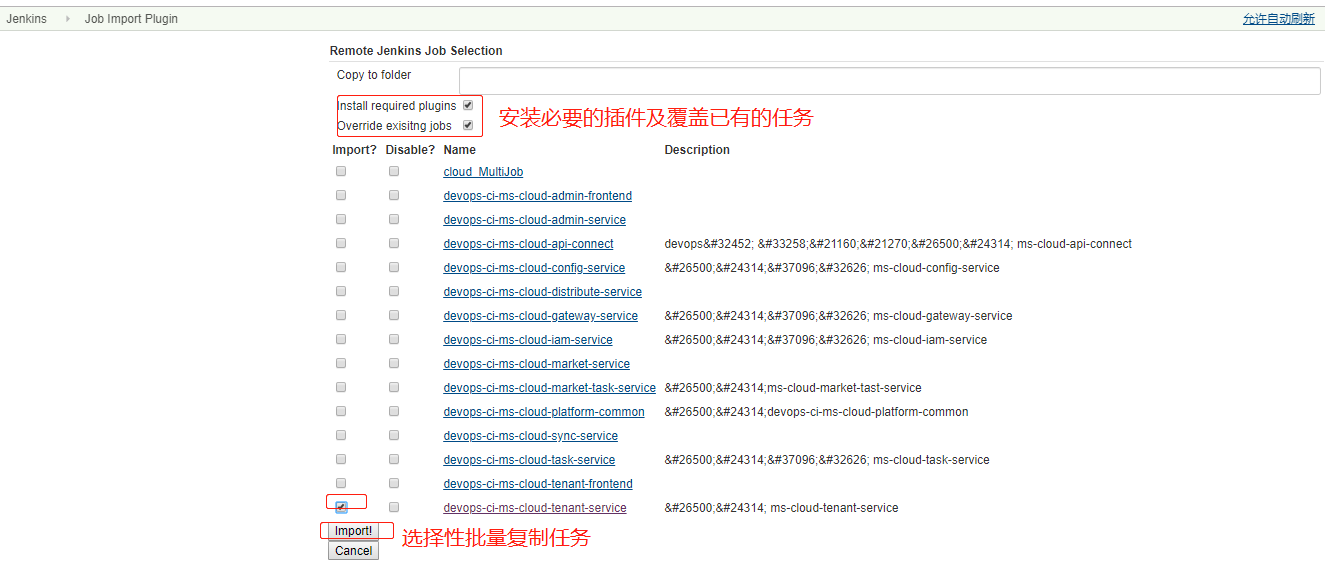

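On the Job Import Plugin page, select the configuration added above from the drop-down list and click Query to list the jobs on the configured Jenkins. Then select the jobs you need and import them:

(4) Plugins used on the old Jenkins may be missing on the new one, so check the option to install required plugins if needed, as in the screenshot above. Likewise, check the option to overwrite existing jobs if that is what you want. A successful import shows the following message:

(5) After that message, return to the home page of the new Jenkins and confirm that the job was imported, then open the imported job and check that its settings were copied correctly, as shown below:

The job and its settings were imported successfully into the new Jenkins.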

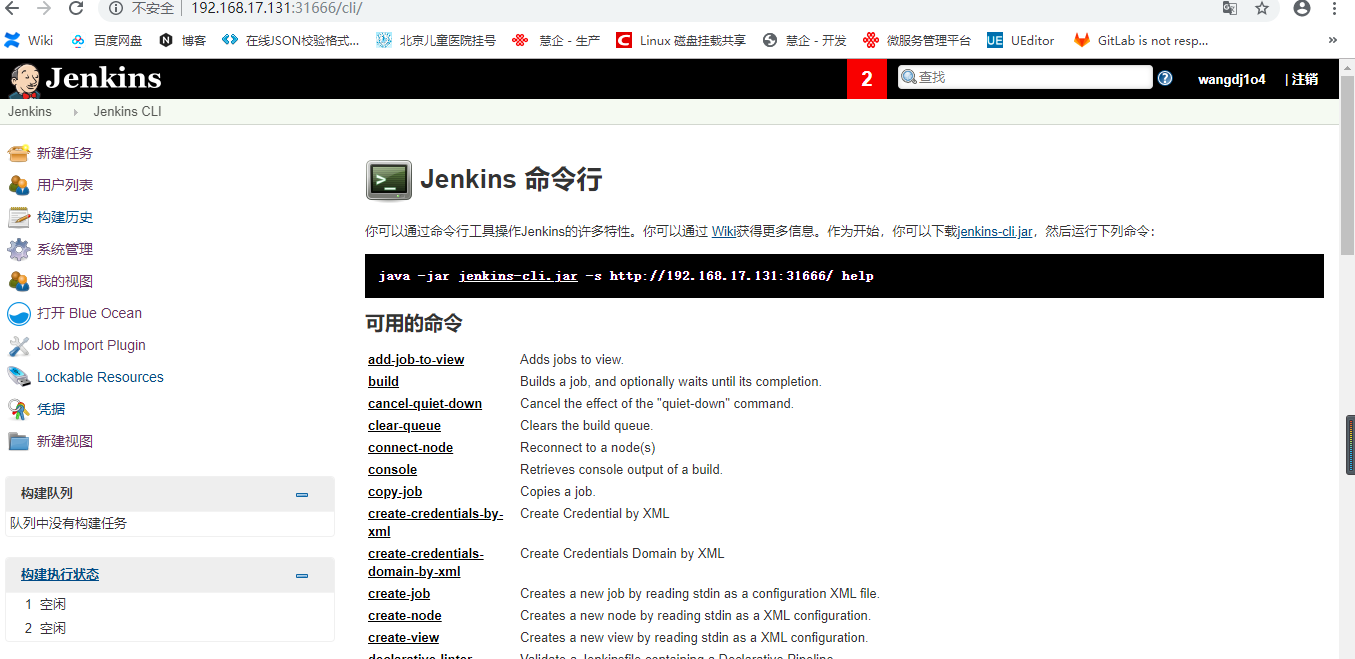

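Import with the Jenkins CLI

Inside a company, Jenkins instances are sometimes deployed in different network segments that cannot reach each other. In that case the Job Import Plugin cannot be used. Instead, export the job configuration with the Jenkins CLI, then import it on the new Jenkins from the exported file. The steps are described below.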

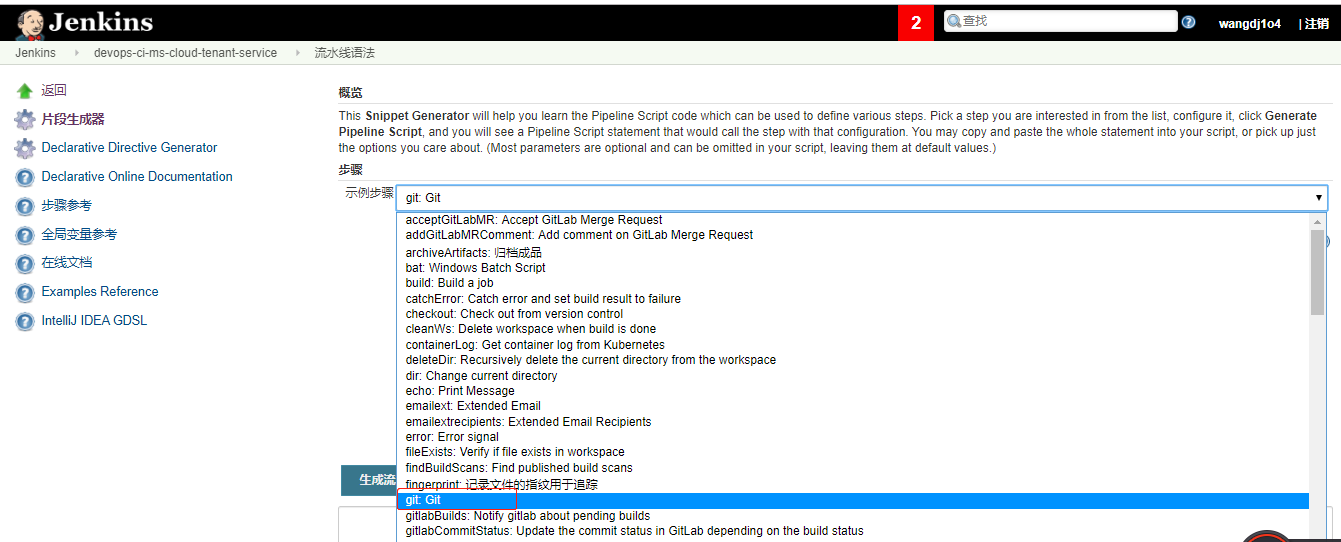

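Open the Jenkins CLI page. The command-line interface offers many commands for operating Jenkins. One of them, get-job, writes a job definition as XML to standard output, so it can be used to export a job from the old Jenkins to a file. Another, create-job, creates a job from an XML configuration, so the file exported from the old Jenkins can be used as its input.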

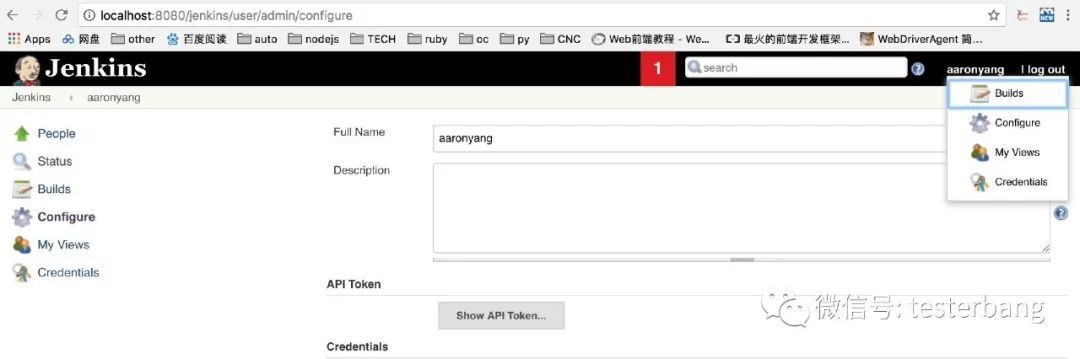

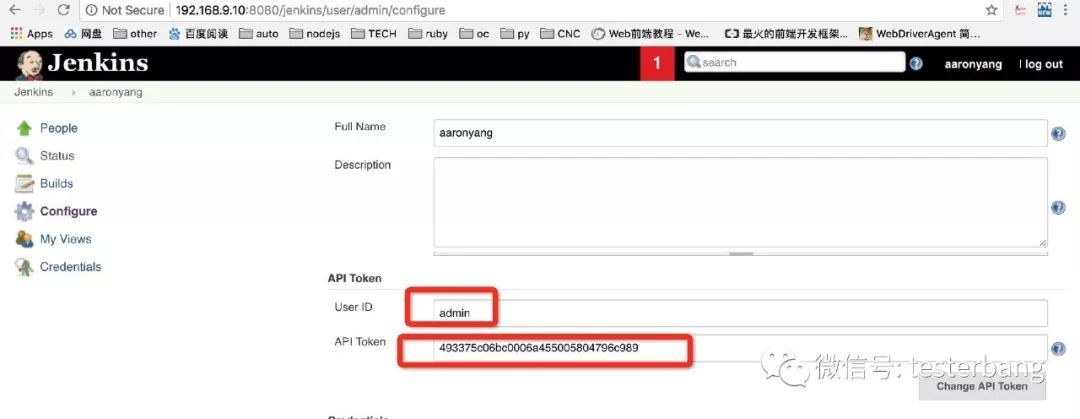

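(2) Click the account name in the upper-right corner of Jenkins, choose Configure, then click Show API Token and copy the token. It is used for authentication when exporting the configuration.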

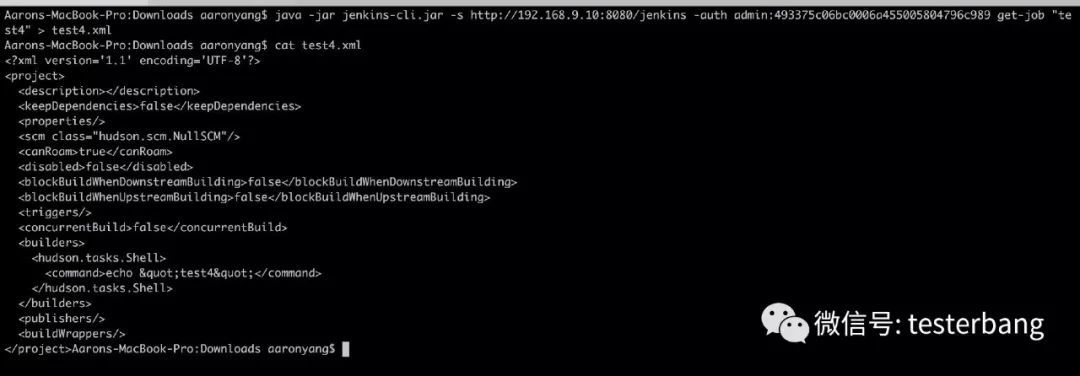

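(3) In the directory where jenkins-cli.jar was downloaded, export the job. Here I created a job named test4 and export its configuration with the following command:

    java -jar jenkins-cli.jar -s http://192.168.9.10:8080/jenkins -auth admin:493375c06bc0006a455005804796c989 get-job "test4" > test4.xml

http://192.168.9.10:8080/jenkins: the URL of the old Jenkins

admin: the User ID shown under Show API Token in the screenshot above

493375c06bc0006a455005804796c989: the API Token value from the screenshot above

test4: the name of the job to export

test4.xml: the name of the exported file; any name works

Replace these values to match your environment. After the command runs, the file test4.xml is generated.

(4) On the new Jenkins, download jenkins-cli.jar as well and copy test4.xml to that machine. Obtain the API Token and User ID of the account on the new Jenkins, then import the job:

    java -jar jenkins-cli.jar -s http://192.168.9.8:8080/jenkins -auth <user-id>:<api-token> create-job "test4" < test4.xml

Remember to replace the URL with that of the new Jenkins, and the User ID and token with those of the new account. After the command runs, the job appears in the new Jenkins.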
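When several jobs have to be moved, the two commands can be wrapped in loops. The sketch below uses only the get-job and create-job commands described above; the function names and the `JENKINS_CLI` override variable are invented for this example, and it assumes job names contain no slashes.

```shell
#!/usr/bin/env bash
# Command used to invoke the CLI; override it (for example with a stub) for dry runs.
JENKINS_CLI=${JENKINS_CLI:-"java -jar jenkins-cli.jar"}

# Export each named job from a Jenkins into OUTDIR/<job>.xml.
# Usage: export_jobs URL USER:TOKEN OUTDIR JOB...
export_jobs() {
  local url="$1" auth="$2" outdir="$3"; shift 3
  local job
  mkdir -p "$outdir"
  for job in "$@"; do
    $JENKINS_CLI -s "$url" -auth "$auth" get-job "$job" > "${outdir}/${job}.xml" || return 1
  done
}

# Create one job on a Jenkins for every XML file found in INDIR.
# Usage: import_jobs URL USER:TOKEN INDIR
import_jobs() {
  local url="$1" auth="$2" indir="$3"
  local file job
  for file in "$indir"/*.xml; do
    [ -e "$file" ] || continue   # directory contains no XML files
    job=$(basename "$file" .xml)
    $JENKINS_CLI -s "$url" -auth "$auth" create-job "$job" < "$file" || return 1
  done
}
```

For instance, `export_jobs http://192.168.9.10:8080/jenkins admin:<api-token> jobs test4` on the old side, followed by copying the jobs directory and running `import_jobs http://192.168.9.8:8080/jenkins <user-id>:<api-token> jobs` on the new side.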

Reference: https://cloud.tencent.com/developer/article/1470433

Bulk-delete jobs

Go to Manage Jenkins -> Script Console, enter the code, and click Run. The script (13 lines) begins with:

    def jobName = "devops-ci-ms-cloud-tenant-service"

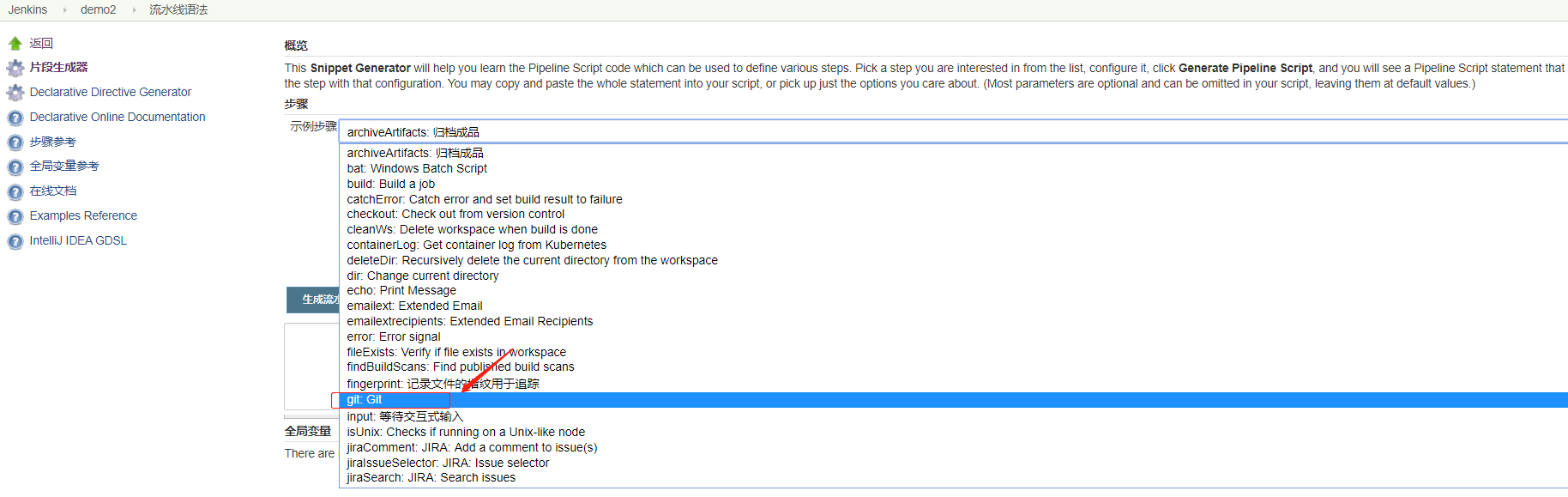

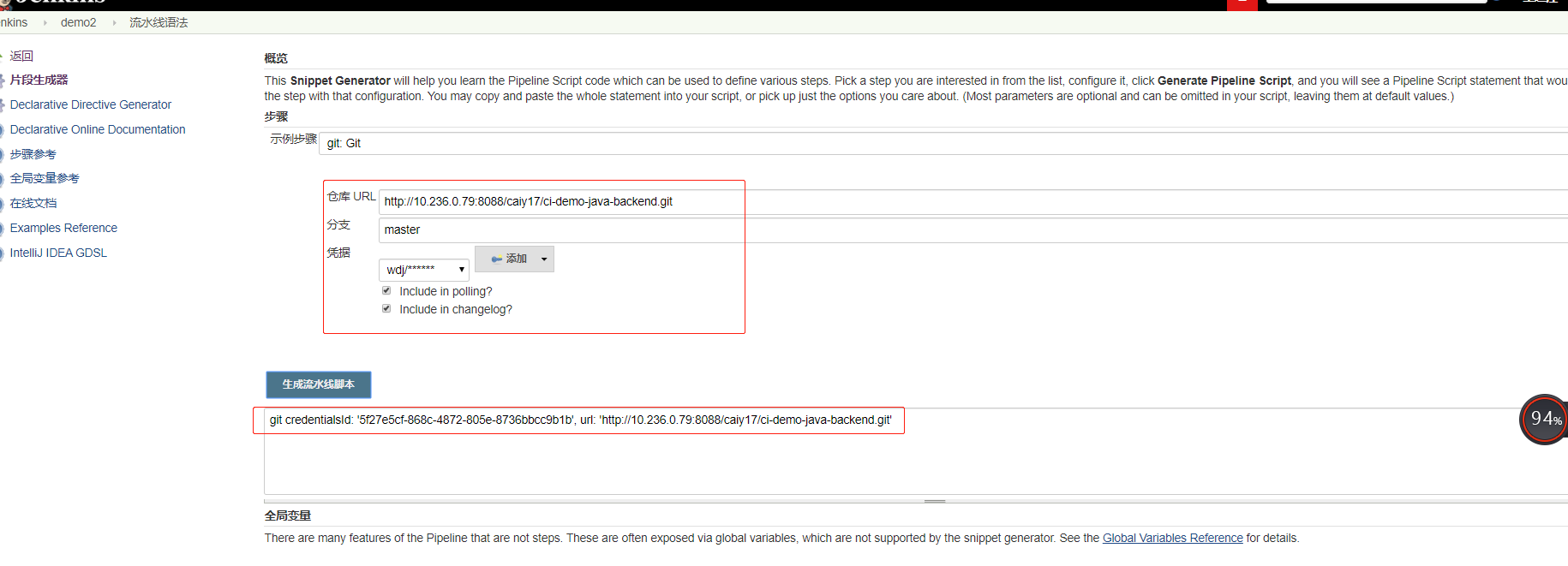

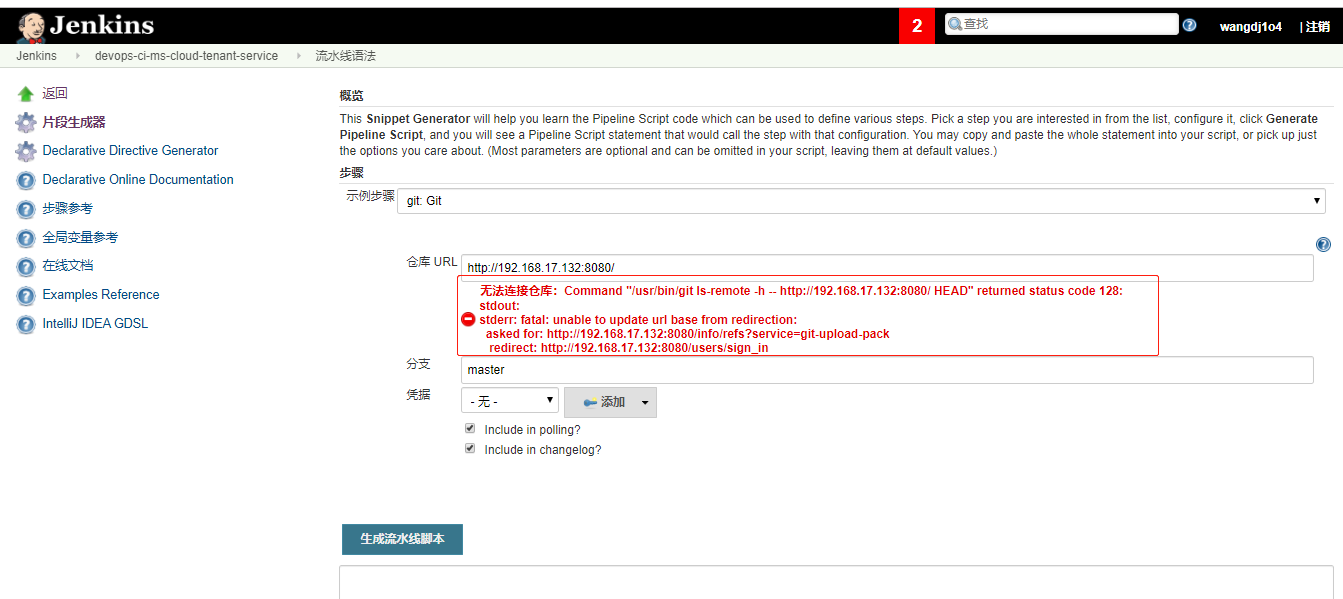

Configure the Jenkins credentialsId

This generates the credentialsId values used to connect to GitLab and Harbor.

Failure case

Success case

Possible causes: https://blog.csdn.net/wudinaniya/article/details/97921383

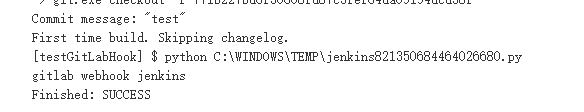

Triggering Jenkins builds with a GitLab webhook

Reference: https://www.cnblogs.com/zblade/p/9480366.html

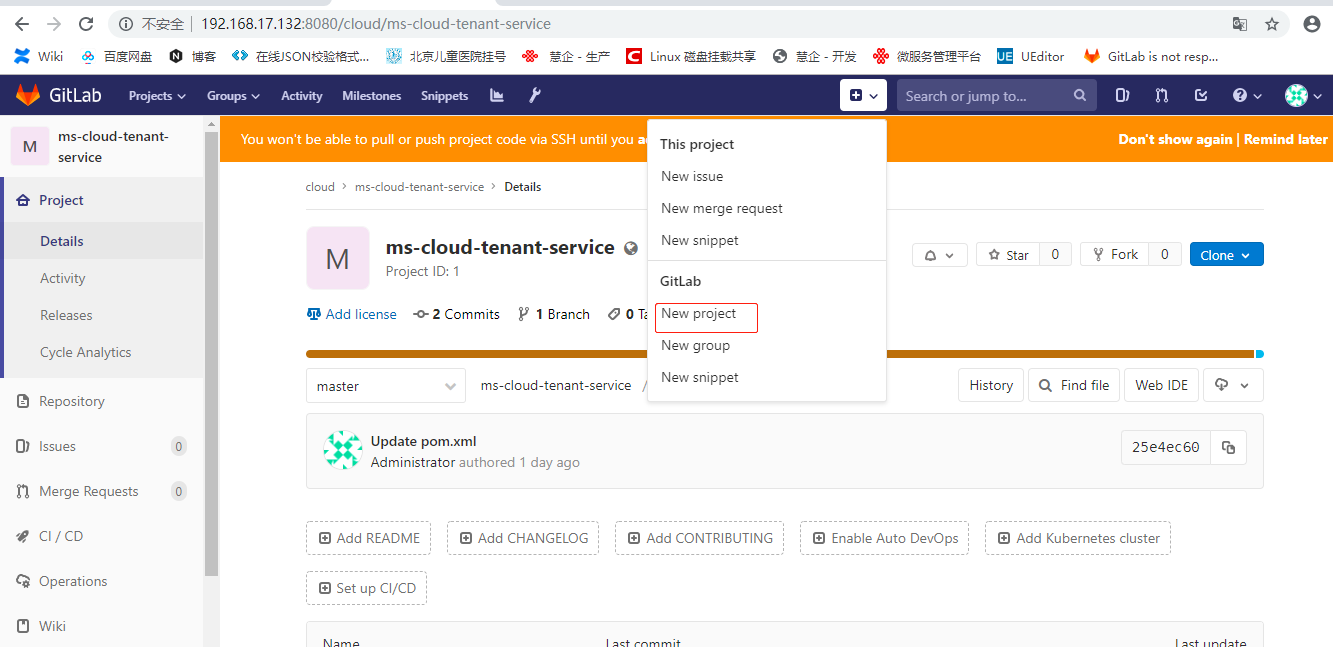

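Create a GitLab test project

Log in to your GitLab account and choose New Project from the plus menu in the upper-right corner to create a personal project.

Leave everything else at its default, enter the project name, and create the project, as shown: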

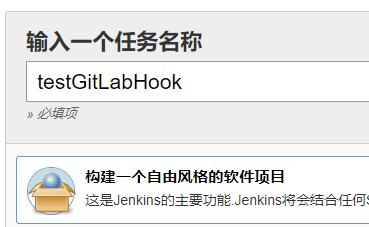

Create the Jenkins job

(1) First verify that the GitLab plugin is installed

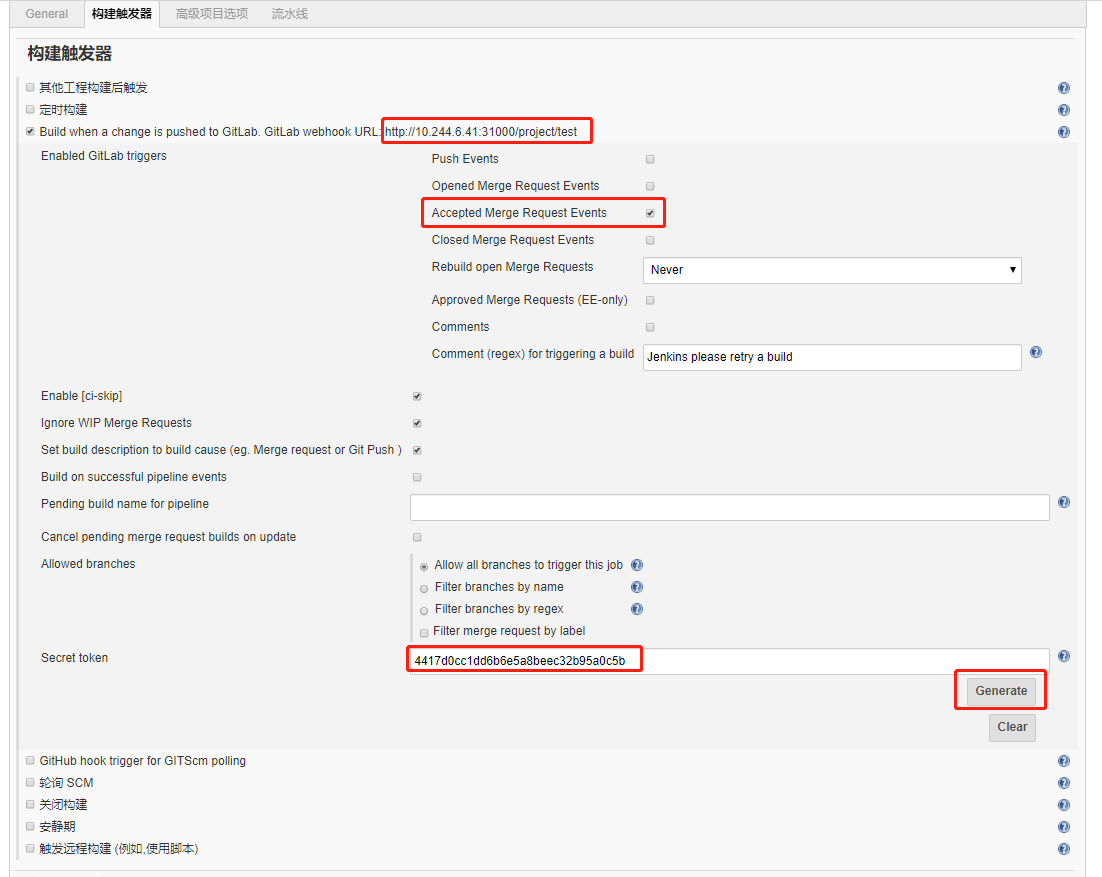

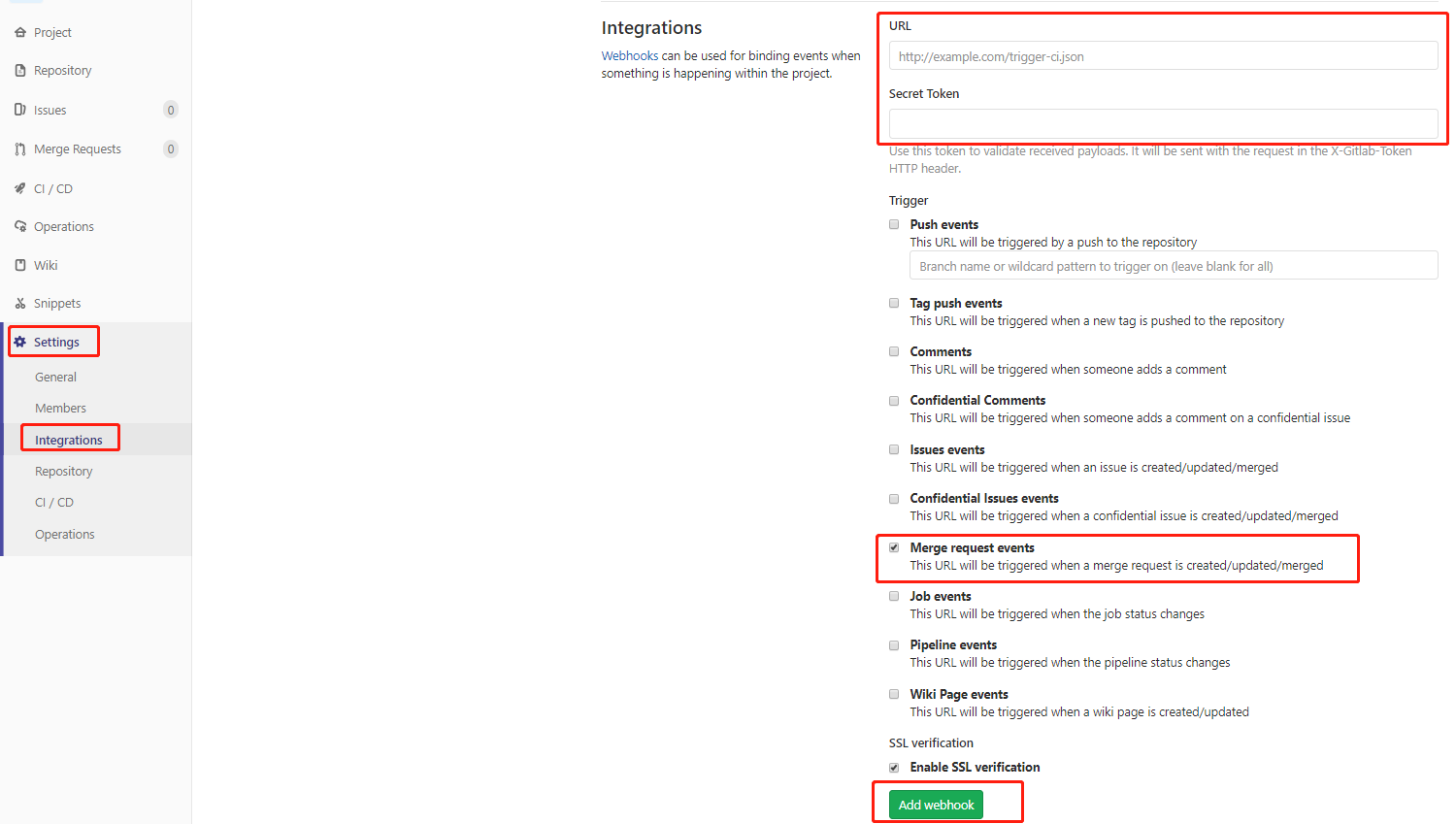

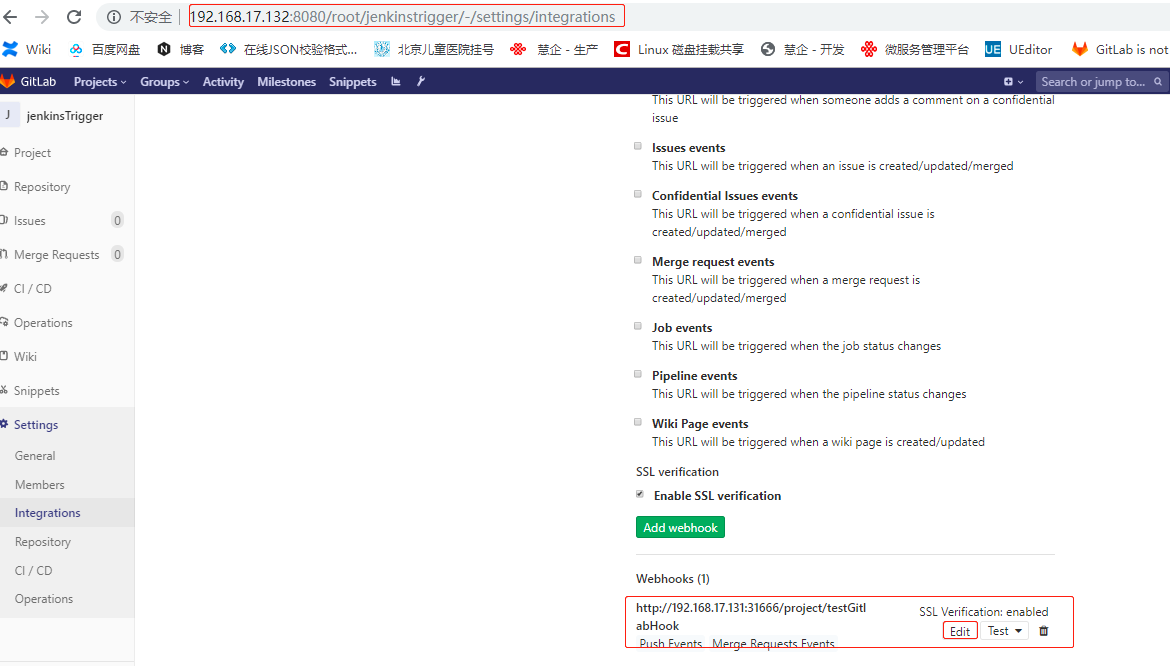

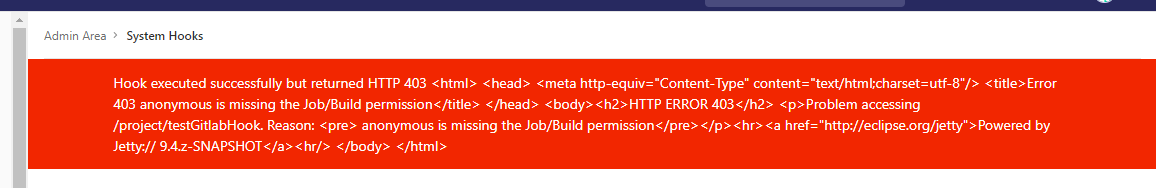

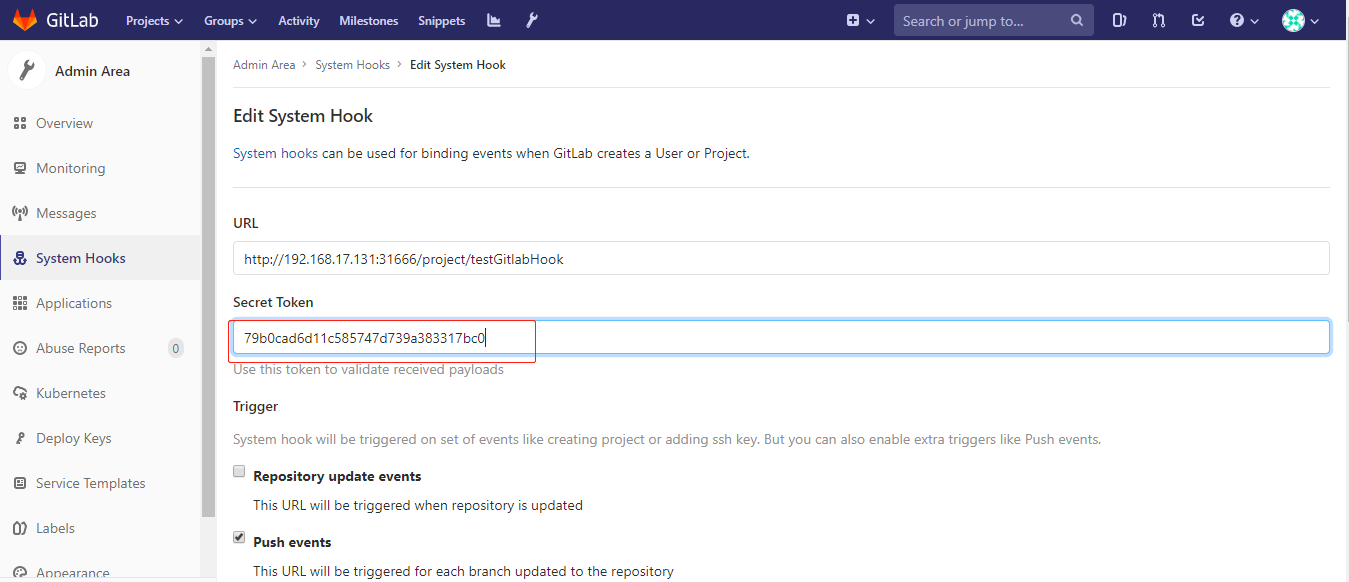

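Configure the GitLab webhook

In GitLab, open the project -> Settings -> Integrations and create the hook with an account that has the Owner role on the project. Enter the URL and token from the previous step and select the merge event. When the owner merges code, the Jenkins build is triggered automatically.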

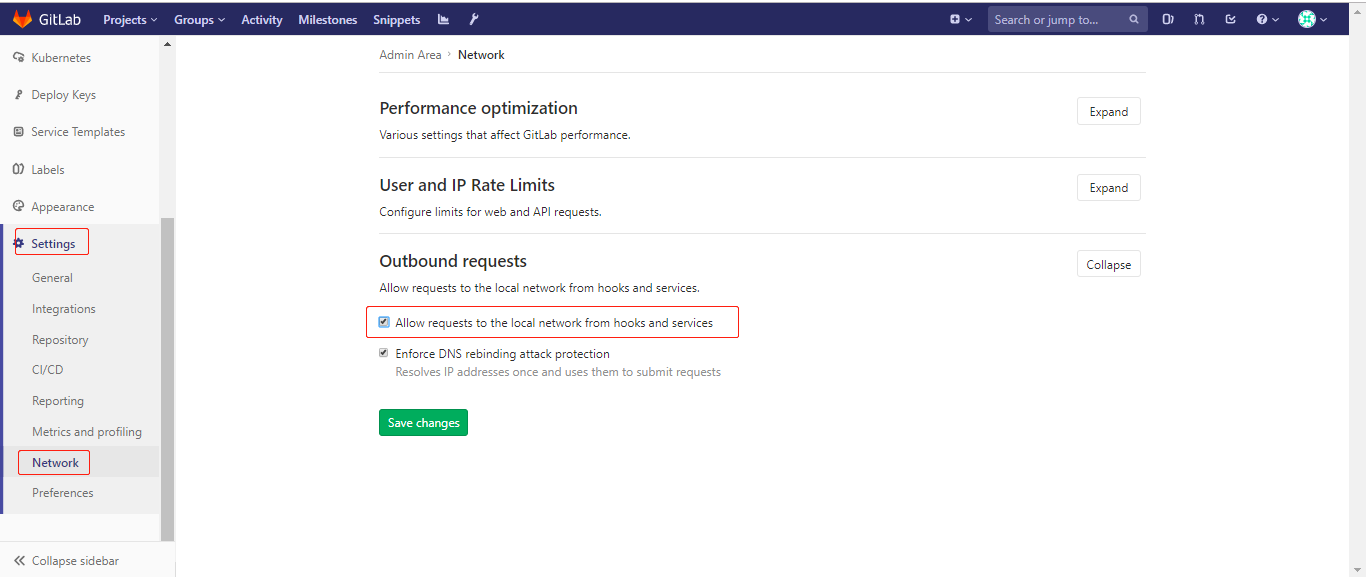

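After clicking Add webhook, GitLab may report: Url is blocked: Requests to the local network are not allowed

Cause: by default, newer GitLab versions block webhook requests to addresses on the local network.

Solution: as an administrator, open Admin Area -> Settings -> Outbound requests and enable the option that allows requests to the local network from hooks and services.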

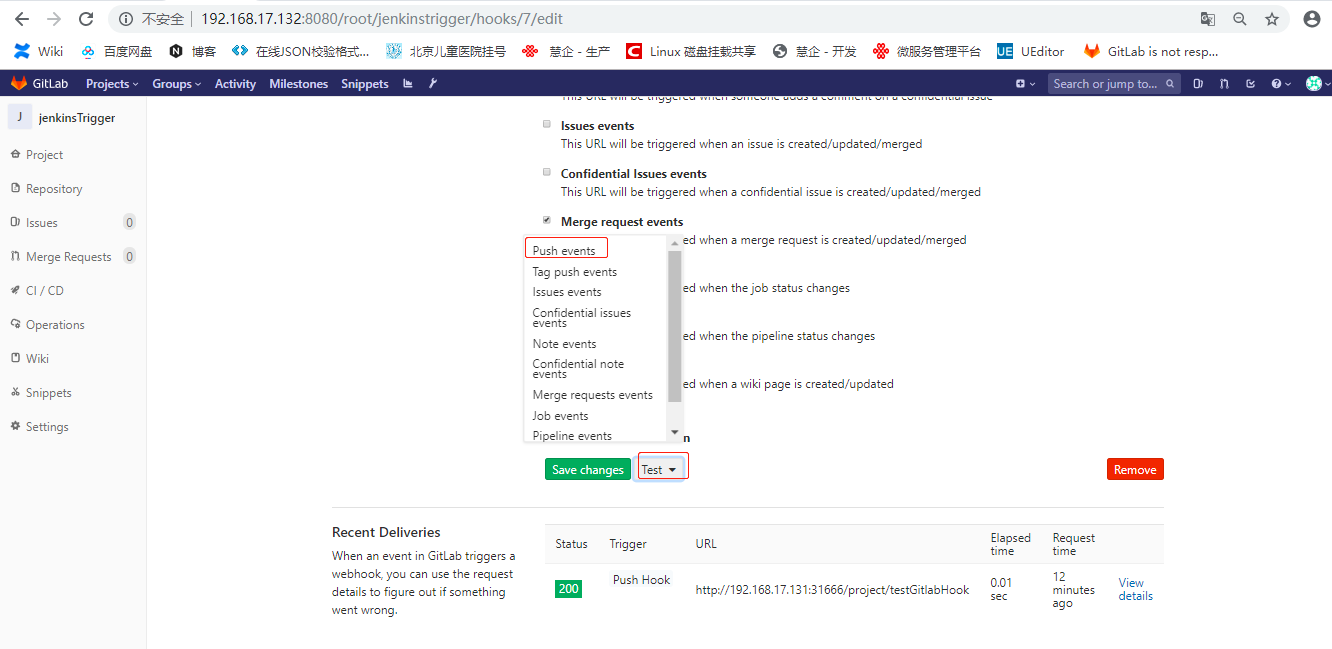

After clicking Save changes, return to the project -> Settings -> Integrations, create the hook again, and then click Edit:

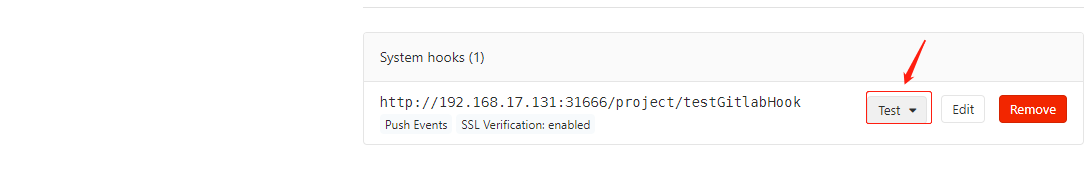

Click Test -> Push events:

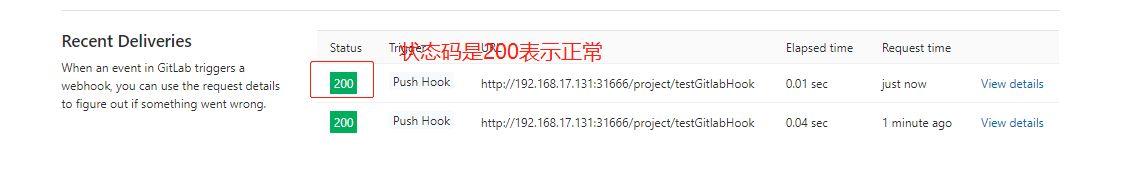

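Check the event status. A status of 200 means the hook works:

Other problems that may occur

(1) Anonymous builds are not allowed

This problem occurs because anonymous builds are not supported. In Jenkins, go to Manage Jenkins -> Configure Global Security and grant anonymous users read permission, as shown: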

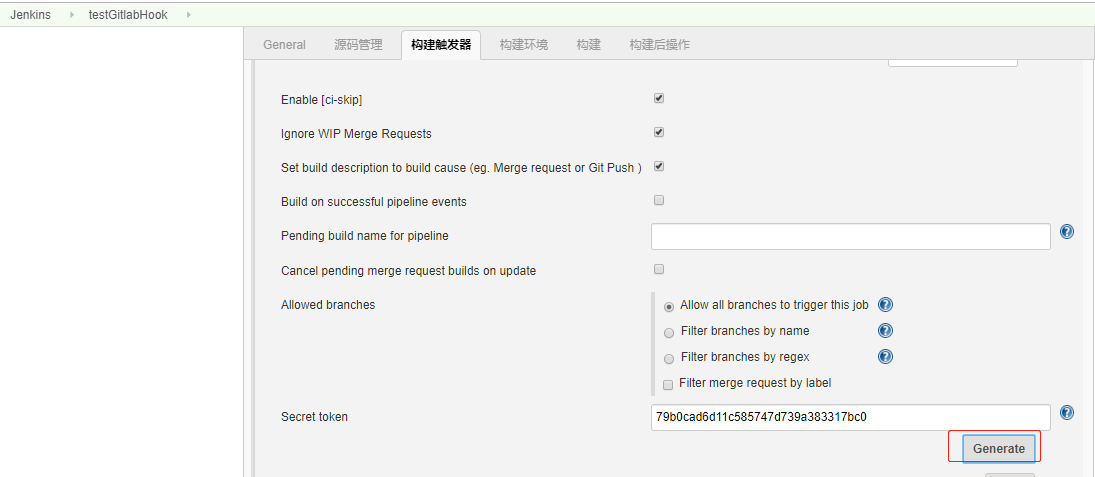

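Click Apply and Save, then return to GitLab and test again. If the error persists, open the job you just created, find the selected build trigger "Build when a change is pushed", and click Advanced at its lower right. There is a Secret token field; click Generate to create a token:

Copy it into the field below the URL in the webhook: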

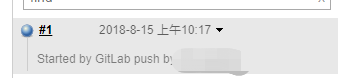

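Save and test again. The test now passes and triggers one Jenkins run:

Check the console output:

Reference: https://www.cnblogs.com/zblade/p/9480366.html

Appendix

Problems encountered

(1) Cluster nodes cannot pull images directly from the public Harbor.

Solution: do all images have to be pulled to every node locally? No. Set the rke project in the Harbor registry to public.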

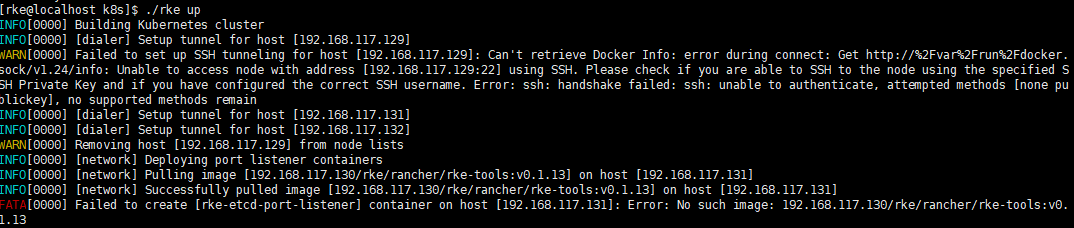

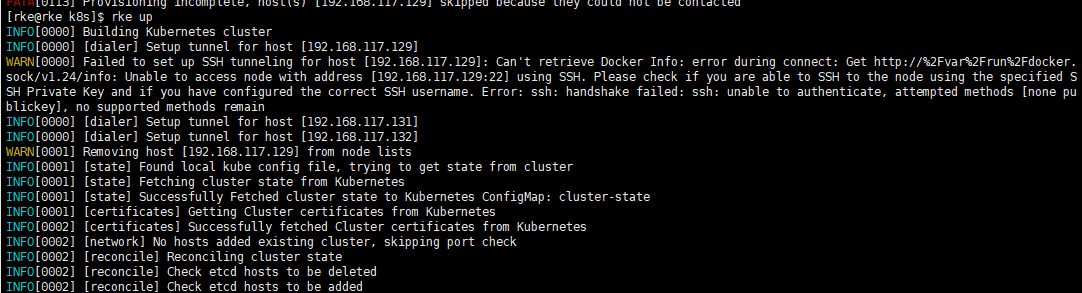

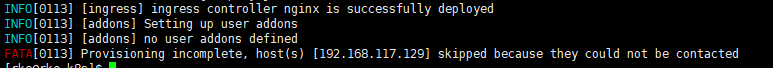

(2) Running ./rke up on machine 129 fails with the error shown below.

Solution: machine 129 had not set up passwordless SSH to itself.

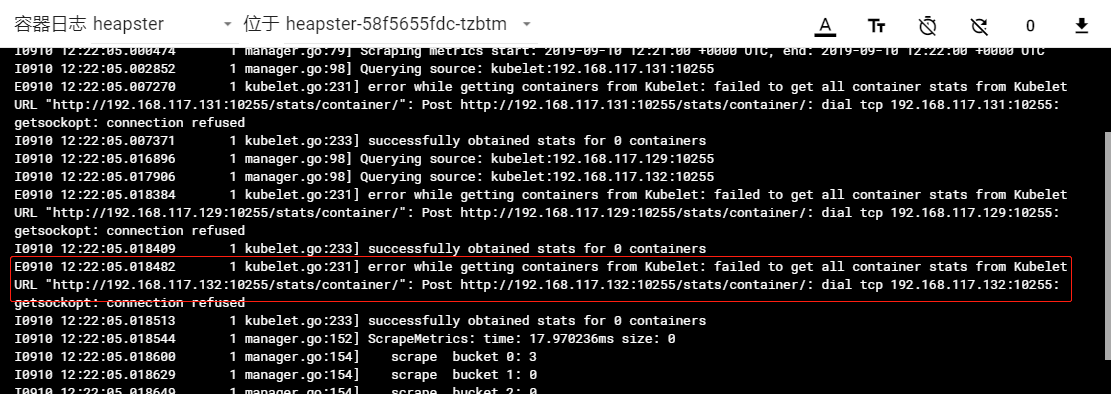

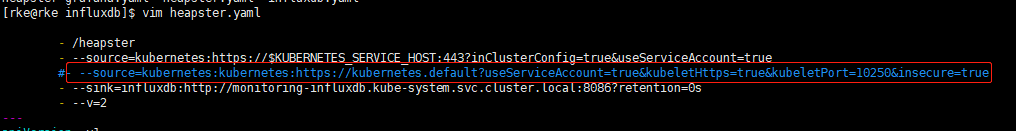

(3) The dashboard shows no graphical statistics.

Solution:

Change --source to the configuration inside the red box in the figure.

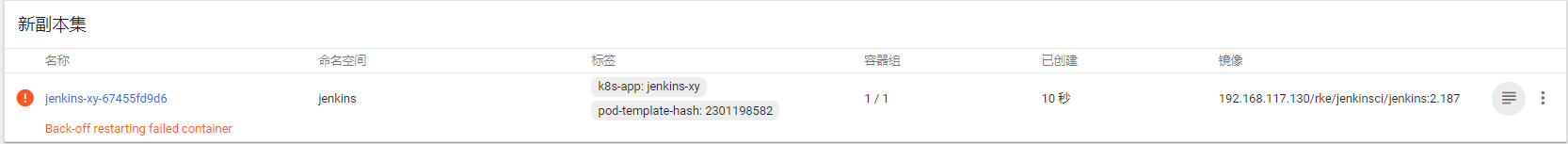

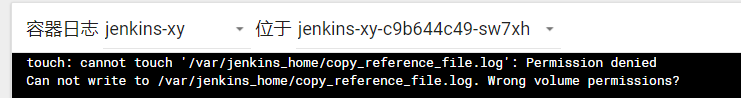

(4) jenkins-master does not start on 192.168.17.131.

Create the directory on machine 131 with mkdir /jenkins-data and fix its ownership with chown -R 1000:1000 /jenkins-data.